Send events to Lumi

In the observability landscape, events are the building blocks that help you understand the state of your system at any given moment. Sending events is an integral step in the event lifecycle, after which you can parse and transform your data and explore it with various queries.

Imply Lumi is an observability warehouse that can integrate with your existing tooling and workflow. Whether you use Lumi as a standalone product or alongside your current setup, consider the following aspects when you send events to Lumi:

- Forwarding agent and communication protocol that fit your infrastructure

- Types of events and data structures you have

- Any transformations or filtering needed for incoming events

Ingestion integrations

You can send events to Lumi through various ingestion integrations. The integration you select depends on your application, performance, and security requirements.

Ingestion protocol strategy

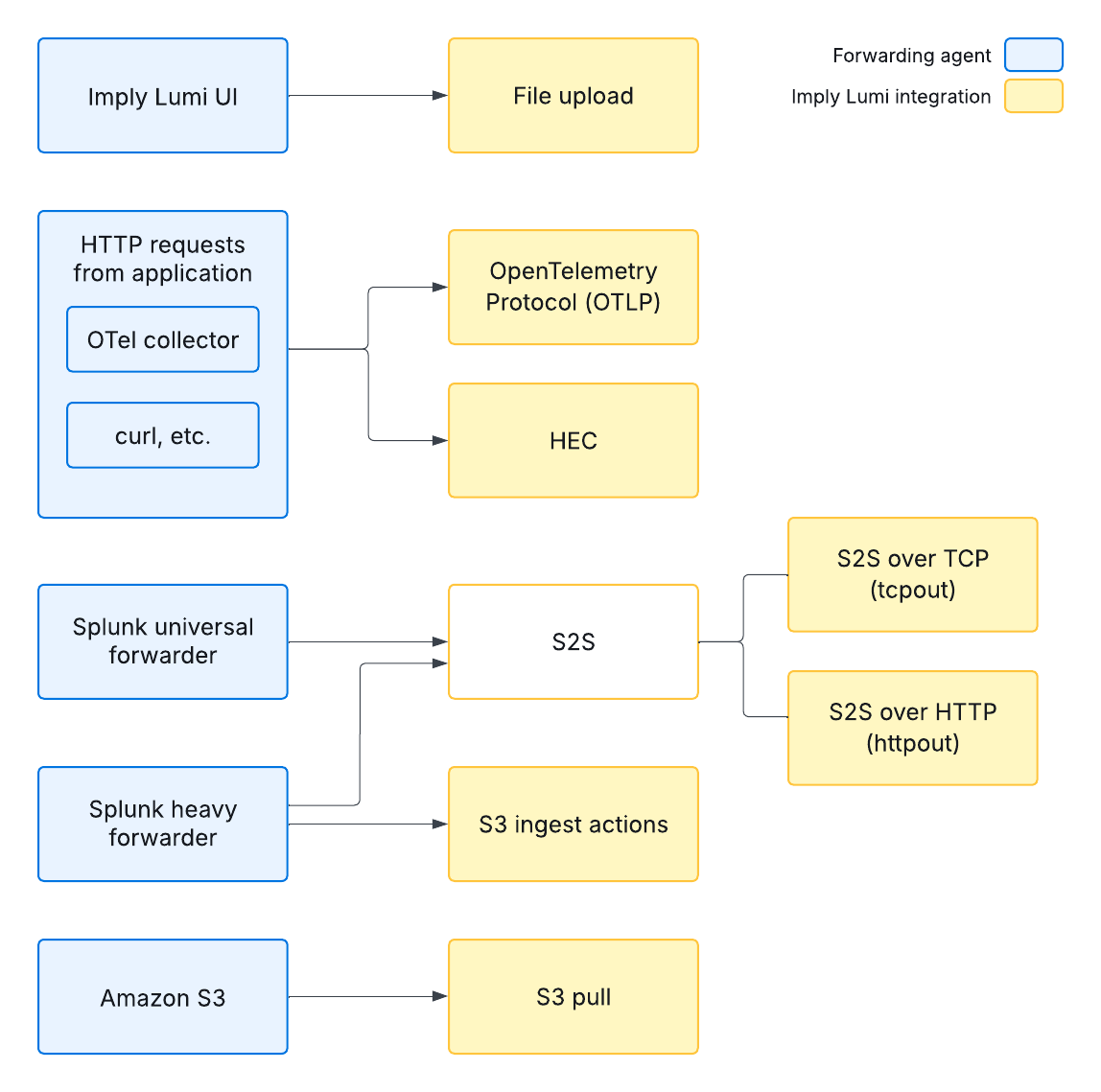

The specific integration you use to send events to Lumi depends on your use case and event forwarding infrastructure. This section presents high-level guidance on which protocol may be suitable for your observability application.

- To get started quickly, upload a CSV or JSON file to Lumi.

- To send events using HTTP requests, such as through the OpenTelemetry Collector:

  - For data in the OpenTelemetry Protocol (OTLP), use the OTLP HTTP integration.

  - For structured JSON data, use the HTTP event collector (HEC) integration.

- If you send events to Splunk® using universal forwarders, configure the Splunk-to-Splunk (S2S) integration to send events.

- If you send events to Splunk® using heavy forwarders, use one of the following integrations:

  - Splunk ingest action to route events to S3 (recommended)

  - Splunk-to-Splunk (S2S)

- To ingest events from objects in an Amazon S3 bucket, use the S3 pull integration.
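The guidance above can be read as a small decision procedure. The following Python sketch is only an illustration of that reasoning: the function name, the `source` and `data_format` labels, and the returned strings are hypothetical and are not part of any Lumi API.

```python
from typing import List


def choose_integrations(source: str, data_format: str = "") -> List[str]:
    """Map a (hypothetical) event source label to the integrations suggested above.

    source: "file", "http", "splunk_universal", "splunk_heavy", or "s3"
    data_format: "otlp" or "json"; only consulted when source == "http"
    """
    if source == "file":
        return ["File upload"]
    if source == "http":
        if data_format == "otlp":
            return ["OTLP HTTP"]
        if data_format == "json":
            return ["HEC"]
        raise ValueError(f"unsupported HTTP data format: {data_format!r}")
    if source == "splunk_universal":
        return ["S2S"]
    if source == "splunk_heavy":
        # The first entry is the recommended option.
        return ["S3 ingest action", "S2S"]
    if source == "s3":
        return ["S3 pull"]
    raise ValueError(f"unknown source: {source!r}")
```

For example, `choose_integrations("splunk_heavy")` returns both heavy forwarder options, with the recommended one first.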

To learn about Lumi as it relates to the Splunk ecosystem, see Lumi concepts for Splunk users.
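For the HTTP path with structured JSON, a request in the style of the Splunk HTTP Event Collector might look like the following minimal sketch. The endpoint URL and token are placeholders, and the `/services/collector/event` path and `Authorization: Splunk <token>` header follow the general Splunk HEC convention; treat both as assumptions and confirm the actual values in the Lumi HEC integration documentation. The helper name `build_hec_request` is hypothetical.

```python
import json
import urllib.request

# Placeholder values (assumptions): substitute the endpoint and token
# issued for your own HEC integration.
HEC_URL = "https://lumi.example.com/services/collector/event"
HEC_TOKEN = "00000000-0000-0000-0000-000000000000"


def build_hec_request(event: dict, url: str = HEC_URL,
                      token: str = HEC_TOKEN) -> urllib.request.Request:
    """Wrap a structured event in a HEC-style envelope and build a POST request."""
    payload = json.dumps({"event": event}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        method="POST",
        headers={
            "Authorization": f"Splunk {token}",
            "Content-Type": "application/json",
        },
    )


request = build_hec_request({"message": "user login", "status": 200})
# Sending is left commented out because it needs a reachable endpoint
# and a valid token:
# urllib.request.urlopen(request)
```

Building the request separately from sending it makes it easy to inspect the headers and body before any event leaves your machine.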

The diagram at the start of this section summarizes these strategies.

Event transformation

To transform events in Lumi, you create a pipeline and define processors as components in the pipeline. You can enrich incoming events with relevant metadata, extract key features from structured formats, or perform other processing tasks. For more information, see Transform events.
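Conceptually, a pipeline applies an ordered list of processors to each incoming event. The Python sketch below only illustrates that idea and does not reflect how pipelines are defined in Lumi; the processor names, the `environment` attribute, and the event shape are all hypothetical.

```python
import json
from typing import Callable, Dict, Iterable, List

Event = Dict[str, object]
Processor = Callable[[Event], Event]


def add_environment(event: Event) -> Event:
    """Enrich the event with a metadata attribute (hypothetical value)."""
    return {**event, "environment": "production"}


def extract_status(event: Event) -> Event:
    """Extract the 'status' field from a JSON-formatted message string."""
    fields = json.loads(str(event["message"]))
    return {**event, "status": fields.get("status")}


def run_pipeline(events: Iterable[Event],
                 processors: List[Processor]) -> List[Event]:
    """Apply each processor, in order, to every event."""
    results = []
    for event in events:
        for processor in processors:
            event = processor(event)
        results.append(event)
    return results


raw_events = [{"message": '{"status": 500, "path": "/login"}'}]
processed = run_pipeline(raw_events, [add_environment, extract_status])
```

Each processor returns a new event rather than modifying its input, so the order of processors in the list fully determines the result.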

Learn more

For tutorials on specific send methods, see the following topics:

- How to send events with the OTel collector

- How to send events with an S3 ingest action

- How to send events with S2S

See the Quickstart and File upload reference for details on uploading files to Lumi.

📄️ Send events with OTLP

Learn how to send telemetry data to Imply Lumi using the OpenTelemetry Protocol. Configure the OTLP integration with an OTel collector or direct API calls.

📄️ Send events with Splunk HEC

Learn how to send events to Imply Lumi using Splunk® HTTP Event Collector with OpenTelemetry, curl, or any HTTP-compatible application.

🗃️ Send events with S2S

Learn how to forward events from Splunk® universal or heavy forwarders to Imply Lumi using the S2S protocol with TCP or HTTP output configurations.

🗃️ Send events with S3 pull

Learn how to send events from Amazon S3 to Imply Lumi. Configure AWS permissions, IAM roles, and handle encrypted data.

📄️ Send events with S3 routing

Learn how to route events from Splunk® to Imply Lumi using S3 ingest actions. Follow a step-by-step setup to configure event forwarding from heavy forwarders.

📄️ Index user attribute

Learn how to assign the index user attribute in Imply Lumi.

📄️ File upload reference

Learn how to upload log files to Imply Lumi. Includes requirements for CSV and JSON formats, file size limits, and managing event attributes effectively.

📄️ File formats

Learn how to batch ingest events into Imply Lumi using supported formats like JSON, CSV, and compression formats for backfills and data evaluation.

🗃️ Troubleshoot data ingestion

Learn how to view, analyze, and resolve ingestion errors in Imply Lumi to prevent data pollution and fix upstream issues with unparsable events.

📄️ Receivers reference

Learn how to use receivers in Imply Lumi to track data sources, filter events by ingestion integration, and analyze usage across your search requests.