Connect to Apache Kafka

To ingest data from Apache Kafka topics into Imply Polaris, create a Kafka connection and use it as the source of an ingestion job. Kafka connections support Amazon Managed Streaming for Apache Kafka (MSK).

Ingestion from Kafka to Polaris uses exactly-once semantics.

Polaris authenticates with your Kafka cluster using Simple Authentication and Security Layer (SASL) authentication. You can authenticate with a Kafka username and password or, for MSK clusters that use IAM access control, with IAM role assumption. Regardless of the authentication method, data in transit between Polaris and Kafka is encrypted with TLS.

This topic provides reference information to create a Kafka connection.

- For a Kafka connection to ingest from Amazon MSK, also refer to Connect to Amazon MSK.

- For a Kafka connection to ingest from Kafka on Azure Event Hubs, also refer to Connect to Kafka on Event Hubs.

Create a connection

Create a Kafka connection as follows:

- Click Sources from the left navigation menu.

- Click Create source and select Kafka/MSK.

- Enter the connection information.

- Click Test connection to confirm that the connection is successful.

- Click Create connection to create the connection.

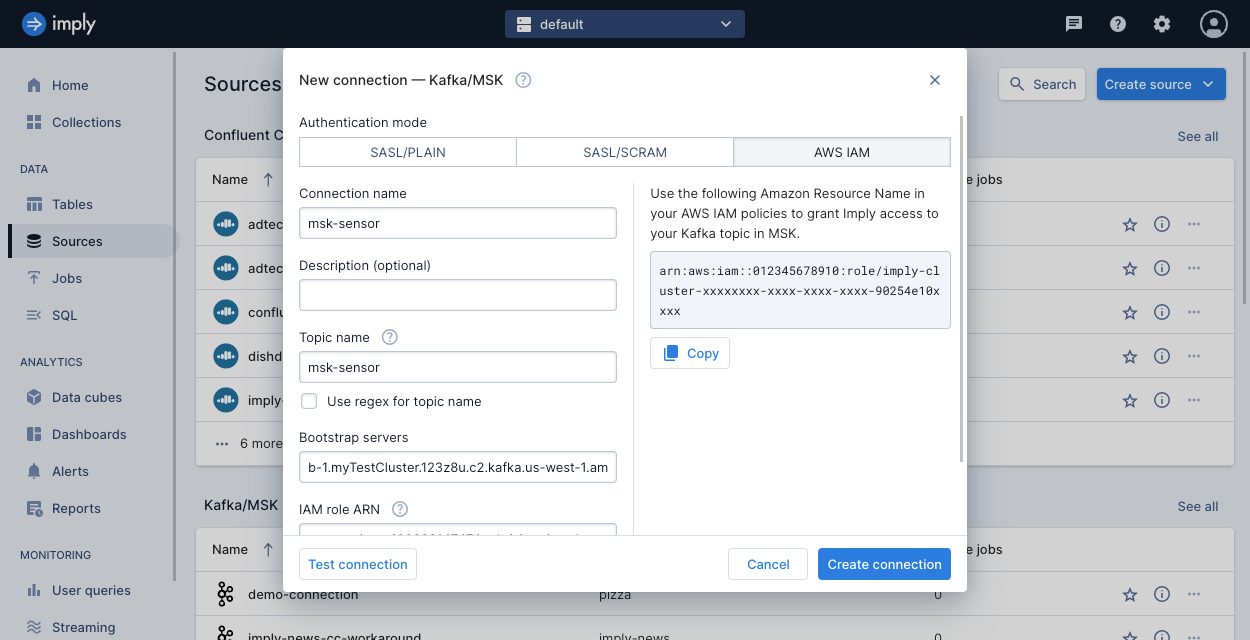

The following screenshot shows an example connection created in the UI. For more information, see Create a connection.

Connection information

Follow the steps in Create a connection to create the connection. The connection requires the following information from Kafka:

- Topic name. The topic name or a regular expression that identifies the Kafka topics. For details on creating a single connection that ingests from multiple Kafka topics, see Multiple topics per connection.

- Bootstrap servers. A list of one or more host and port pairs representing the addresses of brokers in the Kafka cluster, in the following form:

  host1:port1,host2:port2,...
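Before entering these values, you might sanity-check them locally. The following Python sketch is illustrative only: `parse_bootstrap_servers` and `matching_topics` are hypothetical helpers, not part of Polaris. It assumes the topic pattern is matched against the whole topic name (as the Apache Kafka Java client does for pattern subscriptions), and it uses Python's `re` module as an approximation, so unusual pattern syntax may behave differently in Polaris.

```python
import re


def parse_bootstrap_servers(value: str) -> list[tuple[str, int]]:
    """Split 'host1:port1,host2:port2,...' into (host, port) pairs.

    Hypothetical helper for local checks; raises ValueError on malformed input.
    """
    pairs = []
    for entry in value.split(","):
        entry = entry.strip()
        # rpartition splits on the last colon, so the port is always the final field.
        host, sep, port = entry.rpartition(":")
        if not sep or not host or not port.isdigit():
            raise ValueError(f"expected host:port, got {entry!r}")
        port_num = int(port)
        if not 1 <= port_num <= 65535:
            raise ValueError(f"port out of range in {entry!r}")
        pairs.append((host, port_num))
    return pairs


def matching_topics(pattern: str, topics: list[str]) -> list[str]:
    """Return the topics whose whole name matches the regular expression (assumed semantics)."""
    compiled = re.compile(pattern)  # raises re.error for an invalid pattern
    return [t for t in topics if compiled.fullmatch(t)]


# Example with hypothetical broker hostnames and topic names:
print(parse_bootstrap_servers("b-1.example.com:9096,b-2.example.com:9096"))
# [('b-1.example.com', 9096), ('b-2.example.com', 9096)]
print(matching_topics(r"orders-.*", ["orders-us", "orders-eu", "clicks"]))
# ['orders-us', 'orders-eu']
```

Whole-name matching means a pattern such as `orders` matches only the topic named exactly `orders`, not `orders-us`; add `.*` where you intend a prefix match.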

Authentication

Polaris requires a TLS-based connection to access your Kafka data. Provide authentication details using one of the following methods:

- Apache Kafka username and password using Simple Authentication and Security Layer (SASL). Select one of the following SASL mechanisms:

  - SASL/PLAIN. Kafka on Azure Event Hubs supports only SASL/PLAIN.

  - SASL/SCRAM. For SASL/SCRAM connections, you must also provide the SCRAM mechanism: SCRAM-SHA-256 or SCRAM-SHA-512.

- IAM access control, for MSK clusters only. For details, see Connect to Amazon MSK.
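To confirm your username and password with your own Kafka client before creating the connection, you can use the equivalent standard Apache Kafka client settings. The following Python sketch builds those client properties for SASL over TLS. It is not the Polaris API, and `sasl_client_properties` is a hypothetical helper. It assumes your credentials contain no double quotes or backslashes, which would need escaping inside the JAAS string.

```python
# Login modules that ship with the Apache Kafka Java client.
_LOGIN_MODULES = {
    "PLAIN": "org.apache.kafka.common.security.plain.PlainLoginModule",
    "SCRAM-SHA-256": "org.apache.kafka.common.security.scram.ScramLoginModule",
    "SCRAM-SHA-512": "org.apache.kafka.common.security.scram.ScramLoginModule",
}


def sasl_client_properties(bootstrap_servers: str, mechanism: str,
                           username: str, password: str) -> dict[str, str]:
    """Build Kafka client properties for SASL authentication over TLS.

    Hypothetical helper; mechanism is PLAIN, SCRAM-SHA-256, or SCRAM-SHA-512.
    """
    if mechanism not in _LOGIN_MODULES:
        raise ValueError(f"unsupported SASL mechanism: {mechanism}")
    # Quotes and backslashes would need escaping in the JAAS string; reject them here.
    if any(c in s for s in (username, password) for c in '"\\'):
        raise ValueError("quotes and backslashes in credentials are not handled")
    jaas = (f'{_LOGIN_MODULES[mechanism]} required '
            f'username="{username}" password="{password}";')
    return {
        "bootstrap.servers": bootstrap_servers,
        # SASL_SSL means SASL authentication over a TLS-encrypted connection.
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": mechanism,
        "sasl.jaas.config": jaas,
    }
```

These property names are the ones a Java-based client, such as the Kafka console consumer, reads from a properties file. Other client libraries name some of these settings differently.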

Advanced options

You can optionally set the following options in a Kafka connection:

- Client rack: The Kafka rack ID, if you want Kafka to connect to a broker matching a specific rack. For example, use1-az4.

- Self-signed certificates:

  - Truststore certificates: One or more server certificates for Polaris to trust, based on your networking setup.

- Mutual TLS (mTLS) authentication:

  - Keystore certificates: One or more TLS client certificates that Polaris presents to the Kafka brokers, based on your networking setup.

  - Private key and Private key password: The private key that matches the keystore certificates, and the password that protects the key.
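If you also test with a self-managed Kafka client, each of these options has a counterpart among the standard Apache Kafka client properties. The following Python sketch shows that mapping, assuming an Apache Kafka client version 2.7 or later, which accepts PEM-encoded certificates and keys inline (KIP-651). How Polaris applies these values internally is not described here; `advanced_client_properties` is a hypothetical helper.

```python
from typing import Optional


def advanced_client_properties(
    client_rack: Optional[str] = None,
    truststore_pem: Optional[str] = None,
    keystore_pem: Optional[str] = None,
    private_key_pem: Optional[str] = None,
    private_key_password: Optional[str] = None,
) -> dict[str, str]:
    """Map the optional connection settings to Kafka client properties (hypothetical helper)."""
    props: dict[str, str] = {}
    if client_rack:
        # Rack ID used for rack-aware (closest-replica) fetching, if the brokers enable it.
        props["client.rack"] = client_rack
    if truststore_pem:
        # Server certificates to trust, for example a self-signed CA certificate.
        props["ssl.truststore.type"] = "PEM"
        props["ssl.truststore.certificates"] = truststore_pem
    if keystore_pem:
        # mTLS: the client certificate chain plus the private key that matches it.
        if not private_key_pem:
            raise ValueError("mTLS requires the private key for the keystore certificates")
        props["ssl.keystore.type"] = "PEM"
        props["ssl.keystore.certificate.chain"] = keystore_pem
        props["ssl.keystore.key"] = private_key_pem
        if private_key_password:
            # Only needed when the private key is encrypted.
            props["ssl.key.password"] = private_key_password
    return props
```

The check on `private_key_pem` reflects that a client certificate is useless for mTLS without its matching private key.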

You can copy and paste certificates and the private key in PEM format, for example:

    -----BEGIN CERTIFICATE-----
    xxxx
    -----END CERTIFICATE-----
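A quick local check can catch pasting mistakes, such as a missing END line or stray text between certificates. The following Python sketch uses a hypothetical helper, `split_pem_certificates`, which is not part of Polaris. It splits pasted text into certificate blocks and confirms that each body is valid base64. It does not parse or verify the X.509 contents (a tool such as `openssl x509` does that), and it does not handle private key blocks.

```python
import base64
import binascii
import re

# One PEM certificate block; DOTALL lets the body span multiple lines.
_CERT_BLOCK = re.compile(
    r"-----BEGIN CERTIFICATE-----\s*(.*?)\s*-----END CERTIFICATE-----",
    re.DOTALL,
)


def split_pem_certificates(text: str) -> list[bytes]:
    """Return the decoded (DER) bytes of each CERTIFICATE block in pasted PEM text.

    Raises ValueError if there are no blocks, if text appears outside the
    blocks, or if a block body is not valid base64.
    """
    blocks = _CERT_BLOCK.findall(text)
    if not blocks:
        raise ValueError("no -----BEGIN CERTIFICATE----- block found")
    leftover = _CERT_BLOCK.sub("", text).strip()
    if leftover:
        raise ValueError(f"unexpected text outside certificate blocks: {leftover[:40]!r}")
    ders = []
    for body in blocks:
        compact = "".join(body.split())  # drop line breaks inside the body
        try:
            ders.append(base64.b64decode(compact, validate=True))
        except binascii.Error as exc:
            raise ValueError(f"certificate body is not valid base64: {exc}") from None
    return ders
```

For a pasted chain, the length of the returned list tells you how many certificates were recognized.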

Learn more

To learn how to ingest data from Kafka or MSK using the Polaris API, see Ingest data from Kafka by API.

To include Kafka metadata with the ingestion job, see Ingest Kafka metadata.