Guide for Apache Kafka on Azure Event Hubs ingestion

This guide walks through the end-to-end process to ingest data into Imply Polaris from Apache Kafka on Azure Event Hubs. For information on creating the connection in Polaris, see Connect to Apache Kafka on Azure Event Hubs and Connect to Apache Kafka.

The following diagram summarizes the end-to-end process of connecting to your Event Hubs source and ingesting from it. Shaded boxes represent steps taken within Polaris, and unshaded boxes represent steps taken outside Polaris.

The screen captures and instructions in this guide show the configurations for Azure Event Hubs and Imply Polaris as of April 2024. They may not reflect the current state of either product.

Prerequisites

To complete the steps in this guide, you need the following:

- An Event Hubs namespace with Kafka enabled. Kafka is enabled by default on the standard pricing tier of Event Hubs. The basic tier doesn't support it.

- An event hub within the namespace that contains data to ingest. See Supported formats for the data format requirements.

  Note: Setting up ingestion from Kafka on Event Hubs is more straightforward if your Kafka topic already contains data. Add data to the topic before you follow the steps in this guide.

- Permission in Event Hubs to create a shared access signature (SAS) policy with Listen privileges. See the Azure documentation on Authorizing access to Event Hubs resources using Shared Access Signatures.

- Permissions in Polaris to create tables, connections, and ingestion jobs: ManageTables, ManageConnections, and ManageIngestionJobs, respectively. For more information, see Permissions reference.

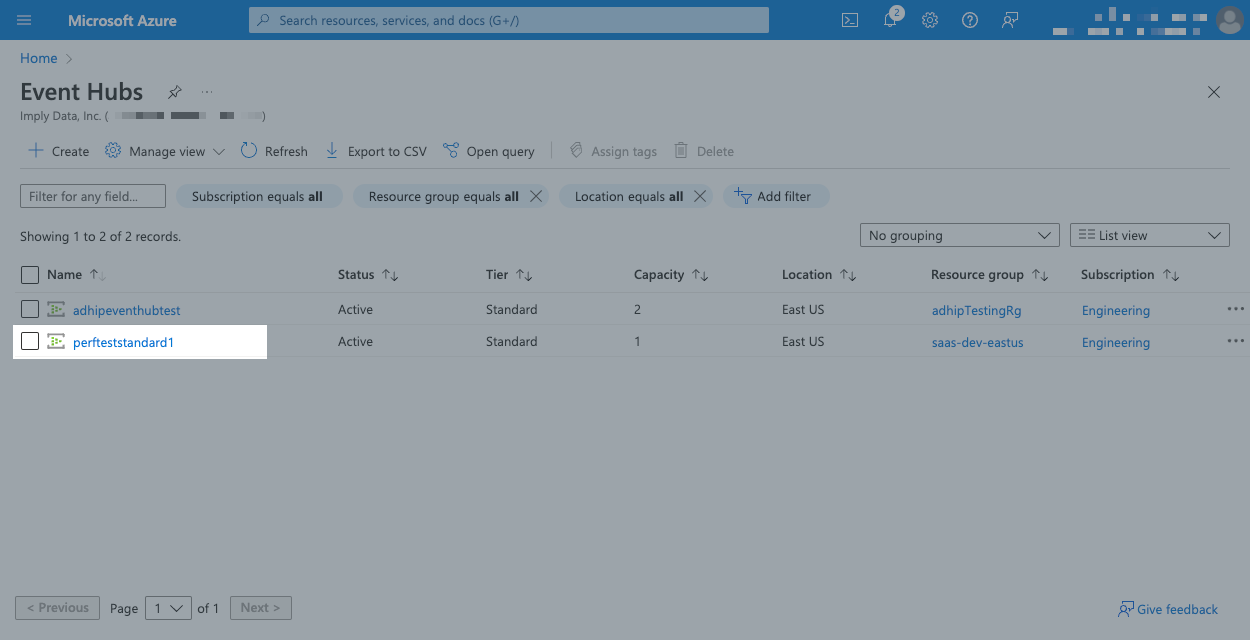

Get details from Azure

In this section, you record the names of the Event Hubs namespace and the event hub that Polaris ingests data from.

In the Azure console:

1. Locate and click the Azure service named Event Hubs.

2. Find your namespace in the list.

3. Copy and save the name of your Event Hubs namespace. You'll need it in a later step.

4. Click the name of your namespace.

5. Click Event Hubs in the left pane.

6. Make a note of your event hub name, which Kafka clients use as the topic name. You'll need it in a later step.

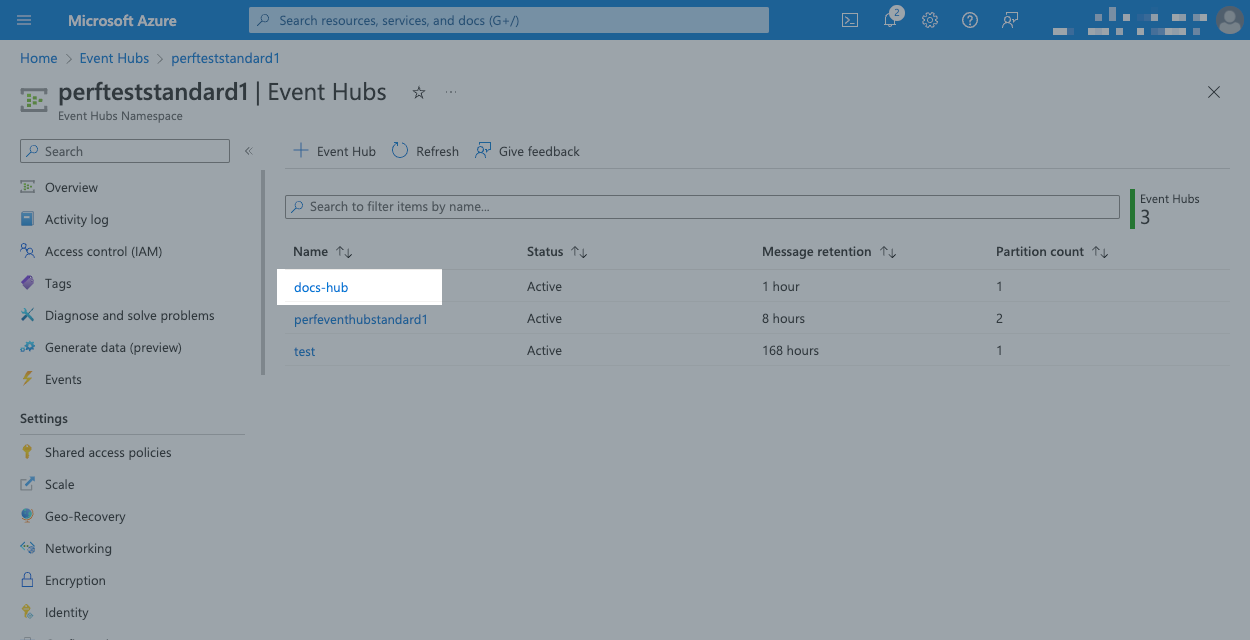

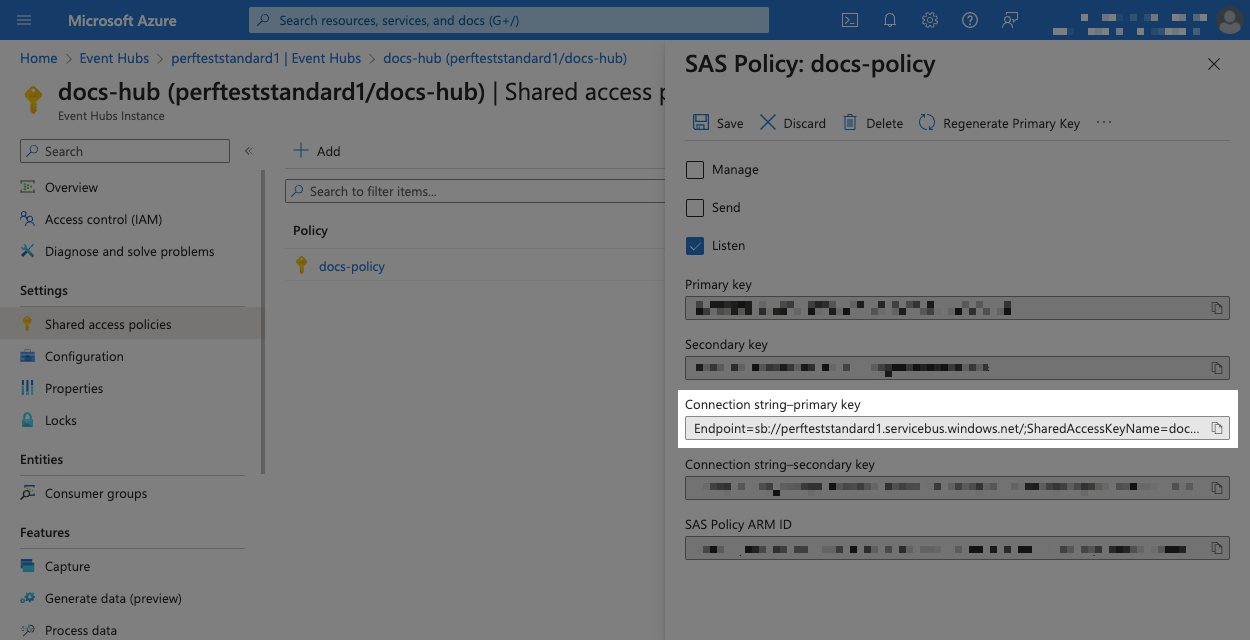

Set up authentication in Azure

In this section, you set up the SAS policy that Polaris uses to authenticate with Event Hubs.

In the Azure console:

1. Find your Event Hubs namespace and click its name.

2. Click the name of your event hub.

3. Click Shared access policies in the left pane.

4. Add a new policy. Enter a name and select the Listen permission.

5. Click Create.

6. Once Azure has created the policy, click its name and copy the string in Connection string—primary key.

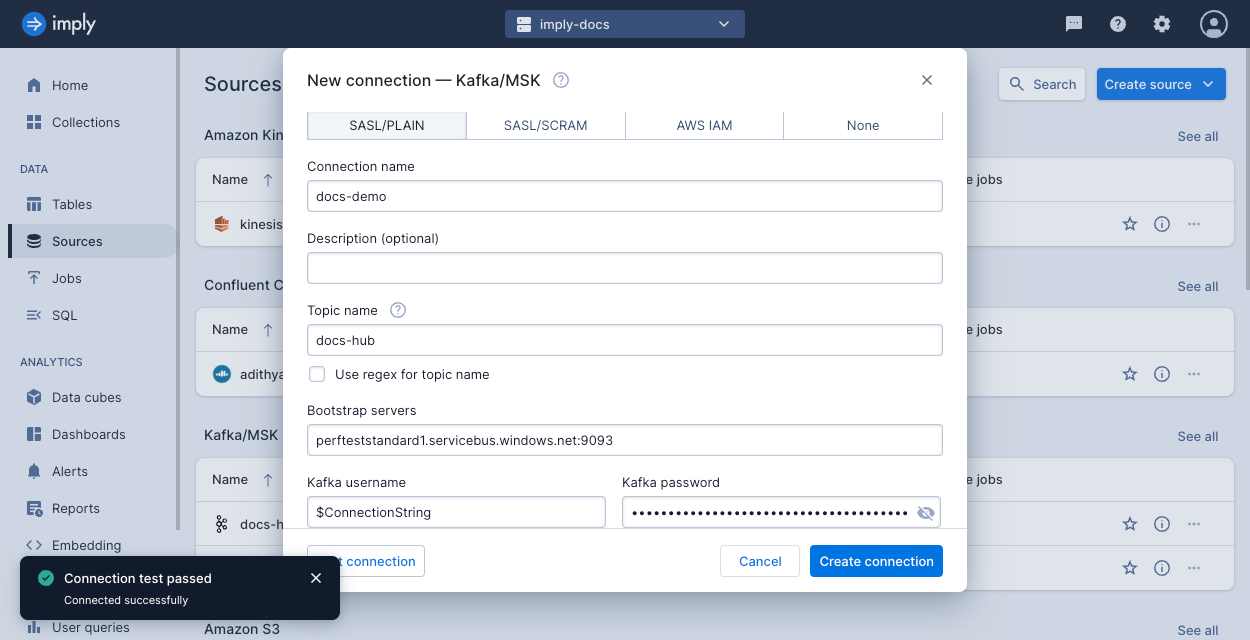
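Before you paste the connection string into Polaris, you can check that it points at the namespace and event hub you recorded. A connection string copied from a policy created on an event hub typically has the form `Endpoint=sb://NAMESPACENAME.servicebus.windows.net/;SharedAccessKeyName=<policy-name>;SharedAccessKey=<key>;EntityPath=<event-hub-name>`. The following Python sketch splits it into its parts. The helper names and the sample values are hypothetical, and the sketch only parses text; it doesn't contact Azure.

```python
from urllib.parse import urlparse


def parse_connection_string(conn_str: str) -> dict:
    """Split an Event Hubs connection string into a dict of key=value parts.

    Hypothetical helper for illustration. Segments are separated by ';'.
    Each segment is split on the first '=' only, because the base64
    SharedAccessKey value can itself end in '='.
    """
    parts = {}
    for segment in conn_str.strip().split(";"):
        if not segment:
            continue  # tolerate a trailing ';'
        key, sep, value = segment.partition("=")
        if not sep:
            raise ValueError(f"malformed segment: {segment!r}")
        parts[key.strip()] = value.strip()
    return parts


def namespace_from_endpoint(endpoint: str) -> str:
    """Return NAMESPACENAME from an endpoint like sb://NAMESPACENAME.servicebus.windows.net/."""
    host = urlparse(endpoint).hostname or ""
    return host.split(".", 1)[0]


# Hypothetical sample; substitute the string you copied from Azure.
sample = (
    "Endpoint=sb://my-namespace.servicebus.windows.net/;"
    "SharedAccessKeyName=polaris-listen;"
    "SharedAccessKey=abc123=;"
    "EntityPath=my-event-hub"
)
parts = parse_connection_string(sample)
print(namespace_from_endpoint(parts["Endpoint"]))  # my-namespace
print(parts.get("EntityPath"))  # my-event-hub
```

If EntityPath is missing, the policy was probably created at the namespace level rather than on the event hub.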

Create a Kafka connection

In this section, you create a Kafka connection in Polaris.

-

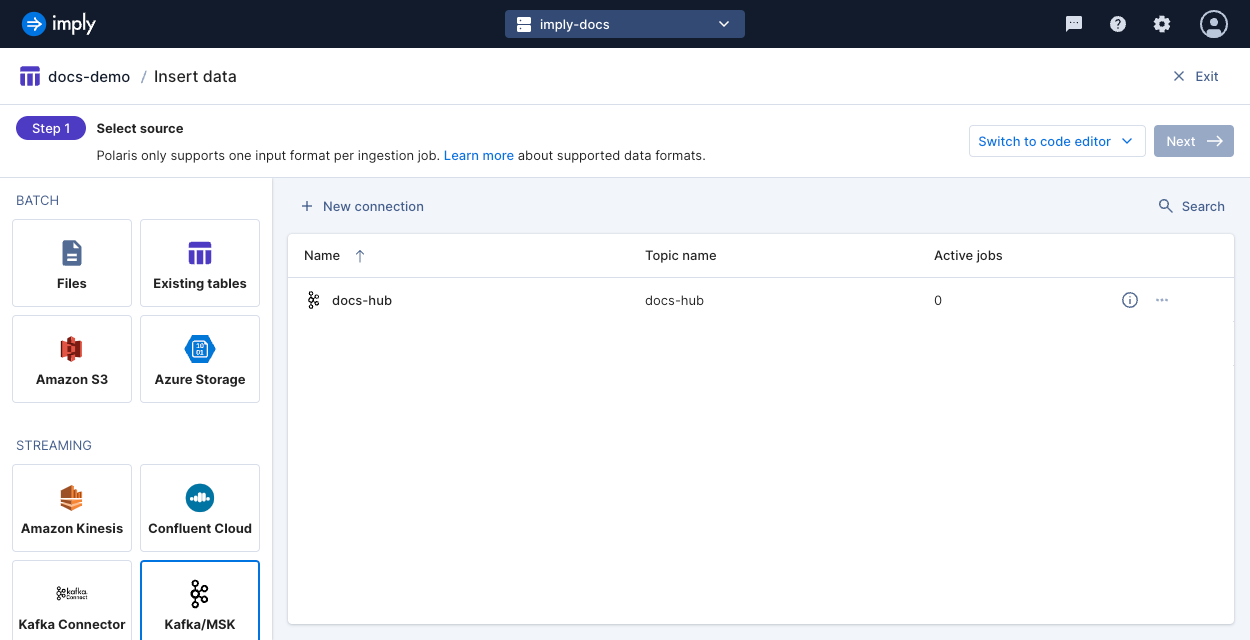

In Imply Polaris, go to Sources > Create source > Kafka/MSK.

-

Select the SASL/PLAIN authentication mode.

-

In the New connection dialog, enter the following details:

- Connection name: A unique name for your connection.

- Description: An optional description for the connection.

- Topic name: The event hub name you copied.

- Bootstrap servers: The following string—replace

NAMESPACENAMEwith the namespace name you copied:

NAMESPACENAME.servicebus.windows.net:9093 - Kafka username:

$ConnectionString - Kafka password: The connection string you copied from the shared access policy.

For more details on these fields and the advanced options, see Connect to Apache Kafka.

-

Click Test connection to ensure that Polaris can make a connection to the event hub.
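For reference, the values in the New connection dialog correspond to the standard Kafka client properties that Azure documents for the Event Hubs Kafka endpoint. The following Python sketch assembles them, for example to try the same credentials with your own Kafka client outside Polaris. The function names and sample values are hypothetical; the property names (`bootstrap.servers`, `security.protocol`, `sasl.mechanism`, `sasl.jaas.config`) are standard Apache Kafka client settings, and `SASL_SSL` reflects that Event Hubs accepts Kafka connections only over TLS.

```python
def event_hubs_kafka_properties(namespace: str, connection_string: str) -> dict:
    """Build Kafka client properties for the Event Hubs Kafka endpoint.

    Mirrors the Polaris connection fields: bootstrap server on port 9093,
    the literal username "$ConnectionString", and the SAS connection
    string as the password.
    """
    if '"' in connection_string:
        # A double quote would break the quoted JAAS password below.
        raise ValueError("connection string must not contain double quotes")
    jaas = (
        "org.apache.kafka.common.security.plain.PlainLoginModule required "
        f'username="$ConnectionString" password="{connection_string}";'
    )
    return {
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "security.protocol": "SASL_SSL",  # SASL authentication over TLS
        "sasl.mechanism": "PLAIN",
        "sasl.jaas.config": jaas,
    }


def to_properties_text(props: dict) -> str:
    """Render the properties as key=value lines, one per line."""
    return "\n".join(f"{key}={value}" for key, value in props.items())


props = event_hubs_kafka_properties(
    "my-namespace",  # hypothetical namespace name
    "Endpoint=sb://my-namespace.servicebus.windows.net/;"
    "SharedAccessKeyName=polaris-listen;SharedAccessKey=abc123=",
)
print(to_properties_text(props))
```

You could save the output as a client configuration file and pass it to a Kafka command-line consumer (for example, kafka-console-consumer with its --consumer.config option) to confirm that the policy can read from the topic. Treat the file as a secret, since it contains the shared access key.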

Start an ingestion job

In this section, you create an ingestion job to add data from your event hub into a table in Polaris.

In this guide, Polaris automatically creates the table based on details in the job definition. For greater control over table properties, such as partitioning or schema enforcement, create the table manually before starting your first ingestion job. For details, see Introduction to tables.

1. In Imply Polaris, go to Jobs > Create job > Insert data.

2. Click New table.

3. Enter a name for the table, and click Next.

4. Select the Kafka/MSK source, then the connection name, and click Next.

5. Verify the input format and fields in the parsed data, and click Continue.

6. Continue through the load data wizard and configure your ingestion job based on your data and use case. Note the following settings:

   i. Polaris doesn't ingest data older than the late message rejection period (30 days by default). You can change this period, or add a rejection period to filter out events with timestamps in the future.

   ii. The Starting offset setting determines whether Polaris ingests events already in the event hub:

      - Beginning: Polaris ingests all existing events, including those shown in the preview, as well as future events sent to the event hub.
      - End: You can preview existing events in the ingestion job, but Polaris only ingests events sent to the event hub after the ingestion job starts.

7. Click Start ingestion.
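The rejection periods and the Starting offset setting described in step 6 can be modeled as simple rules. The following Python sketch only illustrates the behavior this guide describes; it isn't Polaris's implementation. The function names, the "beginning"/"end" strings, and the treatment of timestamps that fall exactly on a boundary are assumptions.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def is_accepted(
    event_time: datetime,
    now: datetime,
    late_period: timedelta = timedelta(days=30),
    early_period: Optional[timedelta] = None,
) -> bool:
    """Return True if an event's timestamp falls inside the rejection window.

    Events older than now - late_period are rejected. If early_period is
    set, events newer than now + early_period are rejected as well.
    Boundary handling here is an assumption.
    """
    if event_time < now - late_period:
        return False
    if early_period is not None and event_time > now + early_period:
        return False
    return True


def offsets_to_ingest(existing: list, new: list, starting_offset: str) -> list:
    """Model which event offsets a new job reads for a given starting offset.

    existing: offsets already in the event hub when the job starts.
    new: offsets of events sent after the job starts.
    """
    if starting_offset == "beginning":
        return existing + new
    if starting_offset == "end":
        return list(new)
    raise ValueError("starting_offset must be 'beginning' or 'end'")


now = datetime(2024, 4, 30, tzinfo=timezone.utc)
print(is_accepted(now - timedelta(days=45), now))  # False: older than 30 days
print(is_accepted(now + timedelta(days=2), now, early_period=timedelta(days=1)))  # False: too far ahead
print(offsets_to_ingest([0, 1, 2], [3, 4], "end"))  # [3, 4]
```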

Learn more

See the following topics for more information:

- Connect to Apache Kafka on Azure Event Hubs for information on creating an Event Hubs connection in the Polaris UI.

- Connect to Apache Kafka for reference information on creating a Kafka connection.