Managing Imply clusters

An Imply cluster consists of the processes that make up Druid, including data servers, query servers, and master servers, along with Pivot.

The way you create and scale a cluster depends on how you run Imply. In an Imply Hybrid (formerly Imply Cloud) installation, you can add clusters from the Imply Manager home page, as described in Quickstart. Imply Manager users with the ManageClusters permission can create and configure clusters.

With Kubernetes, you can define the cluster to be created using a Helm chart. Servers are automatically added to the cluster when the Kubernetes scheduler creates new pods. For more information on cluster management with Kubernetes, see Deploy with Kubernetes.

Create a cluster

Imply Manager users with ManageClusters or AdministerClusters roles (for customers with cluster-level Auth enabled) can create clusters.

To create a cluster, follow these steps:

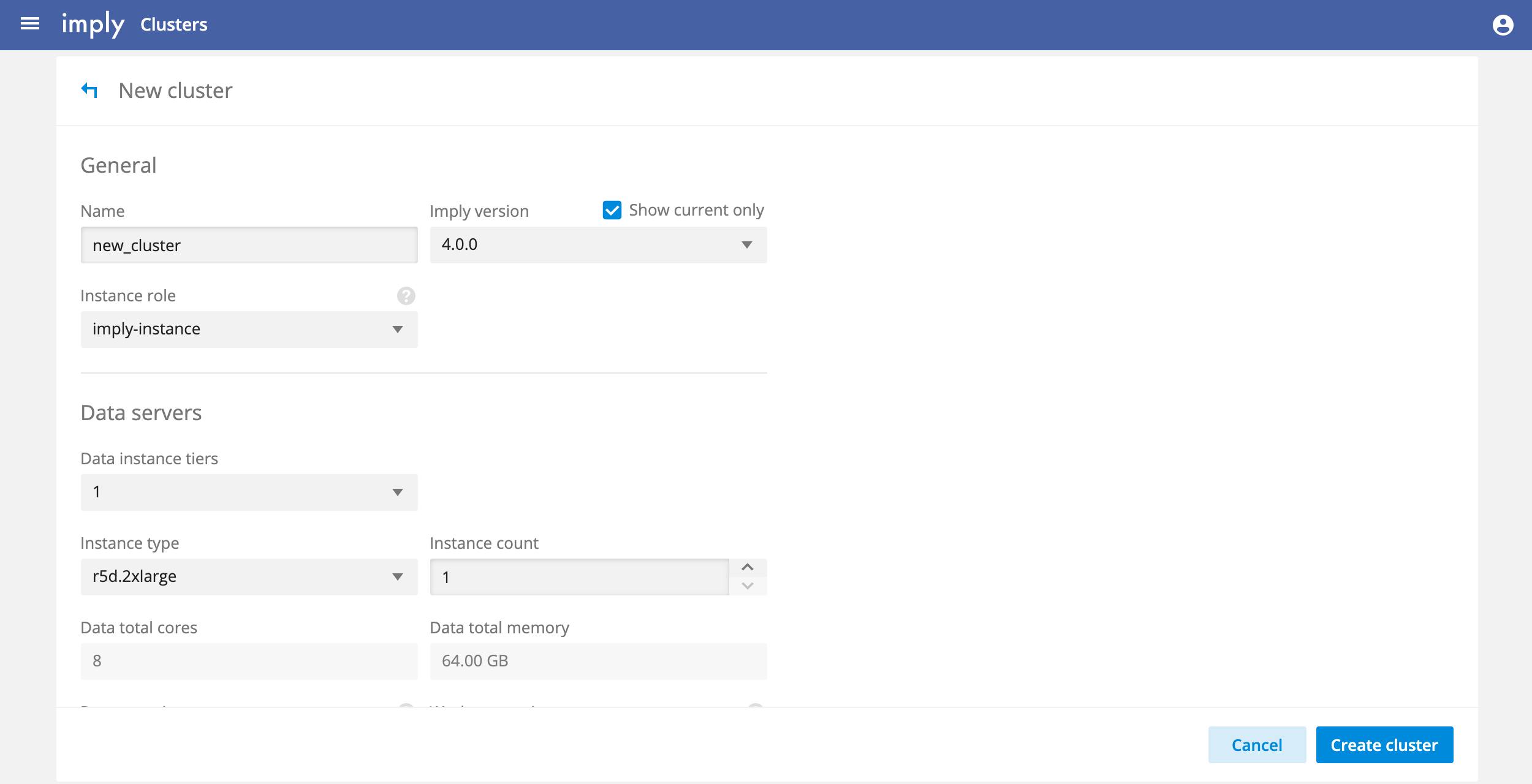

From the Imply Manager home page, click + New cluster. The new cluster configuration page appears.

In the General section, name your cluster and configure the general settings.

In the Data servers section, select an instance type for the cluster.

Review the default settings in the Query servers and Master servers sections. For a highly available cluster, you must have at least 3 master servers. For more information about high availability, see Druid high availability.

Expand the Advanced config section. Default settings appear for Pivot, deep storage, and metadata storage. In Imply Hybrid deployments, metadata storage encryption is enabled by default.

You cannot modify ZooKeeper settings in Imply Hybrid. To customize ZooKeeper settings, use the Imply Enterprise (formerly Imply Private) deployment option.

For Imply Enterprise, you can enter Druid server configuration settings in the Server properties fields. For available properties, see Druid configuration reference. In Imply Hybrid and GCP Enhanced, appropriate values are calculated at cluster creation based on your chosen instance types.

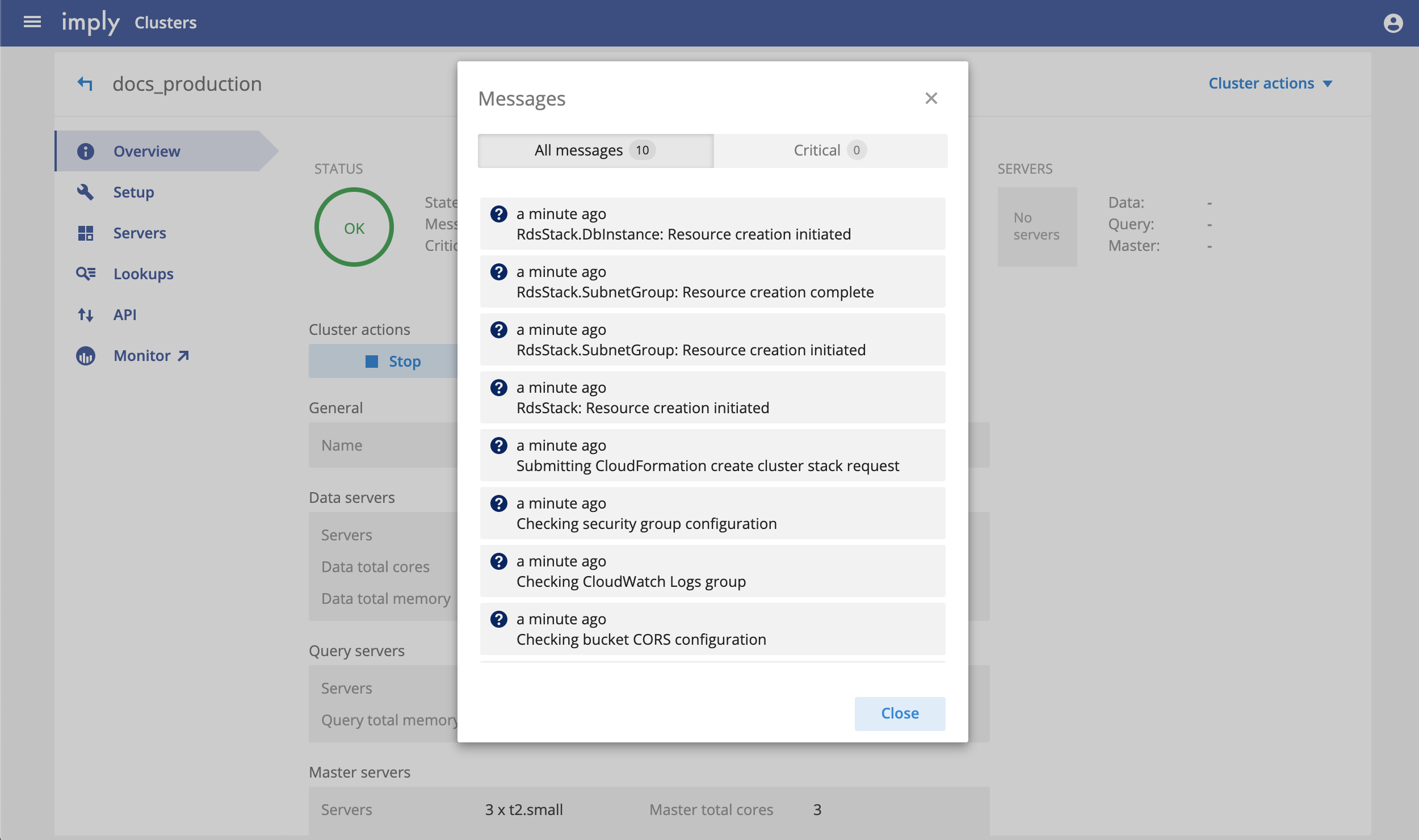

When finished, click Create cluster and confirm the operation to submit the cluster for creation. It may take fifteen to twenty minutes to start up resources for the cluster. You can track progress for the new cluster from the Overview page. For details, click the number next to the messages in the STATUS section of the page. The message log appears:

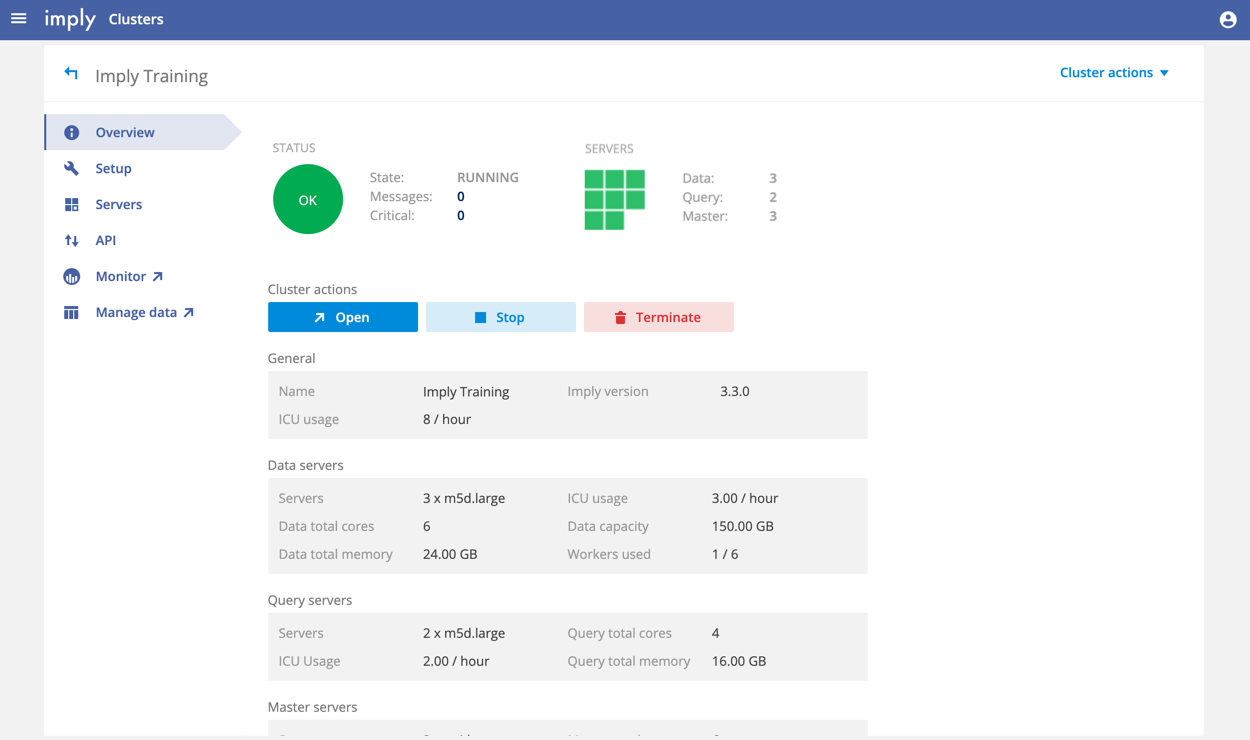

When the deployment is finished, Running appears next to the cluster state on the Overview page. For more information about cluster status, see View cluster status and capacity usage.

With Imply Hybrid, clusters are created behind the scenes by a set of AWS CloudFormation scripts that the Imply team builds for you. You shouldn't need to access or change the scripts manually, but it helps to know how they operate, particularly if Imply support asks you to access them in your AWS account during troubleshooting.

By default, Imply Hybrid deploys servers across multiple availability zones (AZs) in a single region, which helps keep the services highly available.
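The creation steps above call for at least 3 master servers in a highly available cluster. The following minimal sketch shows the majority-quorum arithmetic behind that number. It assumes the master servers also host a ZooKeeper ensemble, as the Apache Druid high availability documentation suggests when it recommends running 3 or 5 master servers with ZooKeeper configured on them; the function names are illustrative only.

```python
def quorum_size(ensemble_size: int) -> int:
    """Smallest majority of an ensemble (assumes majority-quorum voting, as in ZooKeeper)."""
    if ensemble_size < 1:
        raise ValueError("ensemble_size must be at least 1")
    return ensemble_size // 2 + 1


def tolerated_failures(ensemble_size: int) -> int:
    """How many servers can fail while a majority is still available."""
    return ensemble_size - quorum_size(ensemble_size)


for n in (1, 2, 3, 5):
    print(f"{n} master server(s): quorum {quorum_size(n)}, "
          f"tolerates {tolerated_failures(n)} failure(s)")
```

Two servers tolerate no more failures than one, so 3 is the smallest count that survives the loss of a server; under the same assumption, a fourth server adds cost without adding fault tolerance.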

Connect to your cluster

This section explains how to connect to your cluster.

Accessing Pivot

In Imply Hybrid and Enhanced GCP, users can access Pivot by clicking Open from the cluster actions menu found on the cluster's overview page.

On Imply Enterprise, users can access Pivot at http://localhost:9095.

Accessing Druid Web Console

Click Load Data in the Pivot window to open the Druid web console. See Quickstart for information on using the web console.

API Access

In Imply Hybrid and Enhanced GCP, you can find API connection information for a cluster by clicking the API tab on the cluster's overview page in the Imply Manager UI.

If you use Imply Hybrid or have deployed to a cloud host, the API endpoints can only be accessed from within the VPC.

For Imply Enterprise deployments, see the Druid API reference for API access information.
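For scripted access, here is a minimal sketch that builds a request to Druid's SQL API (POST /druid/v2/sql with a JSON body containing the query) using only the Python standard library. The host name is a hypothetical placeholder, and port 8888 is assumed because it is the Apache Druid router's default; substitute the connection details from the API tab or your own deployment. Authentication is not handled. The request is built but not sent, since in Imply Hybrid and cloud-hosted deployments the endpoint is only reachable from inside the VPC.

```python
import json
import urllib.request

# Hypothetical endpoint: replace with the address from the cluster's API tab
# (Imply Hybrid / Enhanced GCP) or with your own router address.
DRUID_URL = "http://druid-router.example.internal:8888"


def build_sql_request(base_url: str, sql: str) -> urllib.request.Request:
    """Build (but do not send) a POST to Druid's SQL endpoint."""
    body = json.dumps({"query": sql}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/druid/v2/sql",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_sql_request(DRUID_URL, "SELECT 1")
print(req.get_method(), req.full_url)

# To execute from inside the VPC (no authentication handled here):
# with urllib.request.urlopen(req, timeout=30) as resp:
#     print(json.load(resp))
```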

View cluster status and capacity usage

The cluster overview page lets you view the status and health of the cluster at a glance. The top of the page shows the current status of the cluster and the server types that make up the cluster.

The disk utilization of the cluster appears in the Used column of the clusters list. The utilization value reflects the disk usage of the cluster, or more specifically, the segment cache usage compared to the segment cache size for the cluster as a whole. This information helps you determine when you need to scale the cluster or take other remediation actions.

When considering disk usage, note that some disk space is reserved for internal Imply operations and is therefore shown as occupied in the disk usage graph. Specifically, about 25 GB is reserved for temporary ingestion storage, heap dumps, log files, and other internal processes.

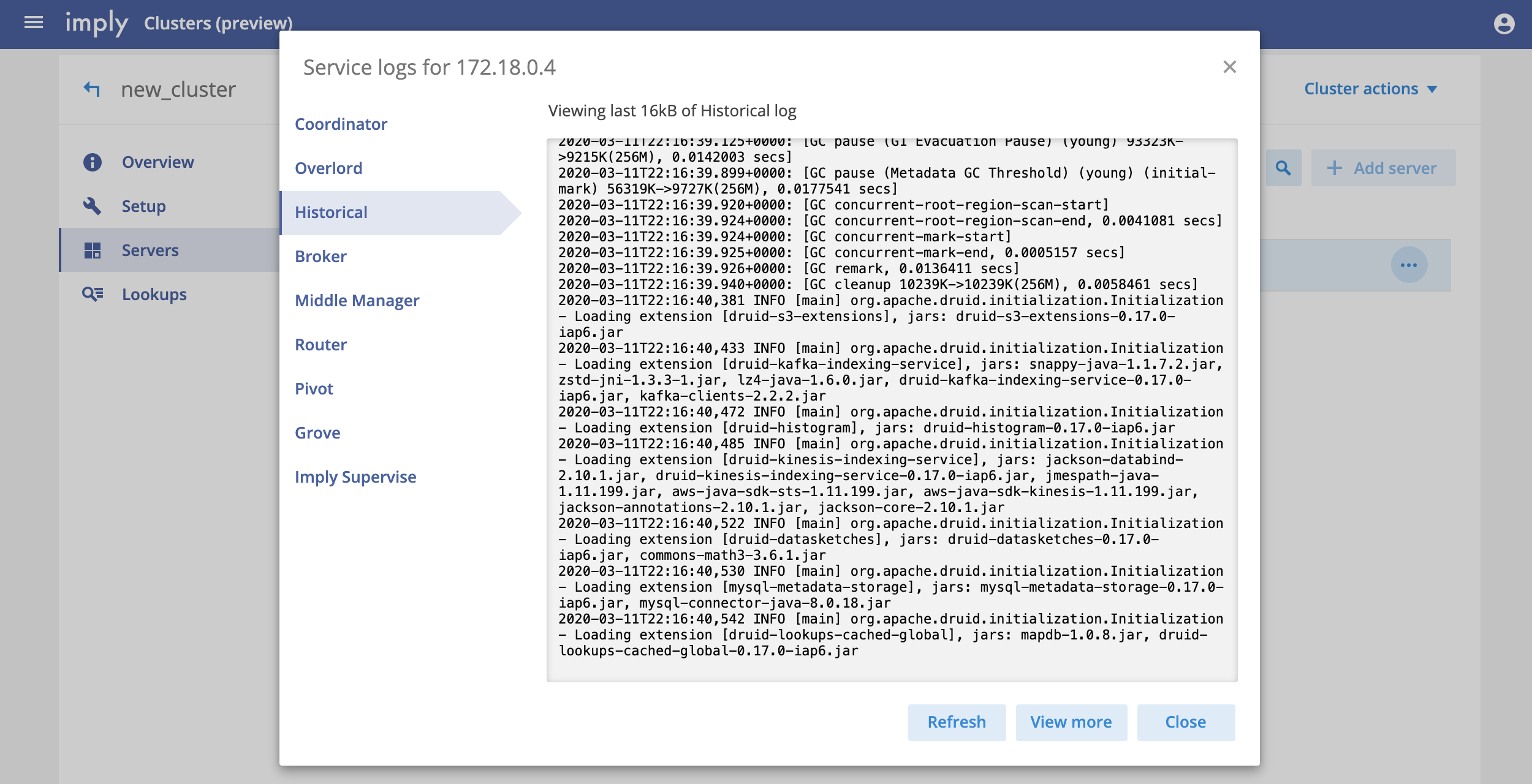
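The Used value described above compares segment cache usage with segment cache size for the cluster as a whole. A minimal sketch of that ratio, aggregated over all data servers rather than averaged per server (so larger servers weigh more), is shown below, along with a projection for horizontal scaling. The DataServer type and sizes are hypothetical, and the roughly 25 GB reserve, which appears in the disk usage graph, is not modeled.

```python
from dataclasses import dataclass
from typing import Sequence

GB = 1000 ** 3  # illustrative unit; the Manager UI's exact units are not specified here


@dataclass
class DataServer:
    segment_cache_used: int  # bytes of segments currently in this server's cache
    segment_cache_size: int  # configured segment cache capacity, in bytes


def cluster_utilization(servers: Sequence[DataServer]) -> float:
    """Segment cache usage divided by segment cache size across the whole cluster."""
    total_size = sum(s.segment_cache_size for s in servers)
    if total_size == 0:
        raise ValueError("cluster has no segment cache capacity")
    return sum(s.segment_cache_used for s in servers) / total_size


servers = [DataServer(300 * GB, 500 * GB), DataServer(100 * GB, 500 * GB)]
print(f"current: {cluster_utilization(servers):.0%}")  # current: 40%

# Projection for horizontal scaling: one more data server of the same size,
# assuming the same amount of segment data is spread over more capacity.
scaled = servers + [DataServer(0, 500 * GB)]
print(f"after adding a server: {cluster_utilization(scaled):.0%}")  # after adding a server: 27%
```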

For more information about cluster status and operations, you can view the process logs from across the cluster in the Manager UI.

Scale a cluster

Imply clusters are scaled in two ways:

- Horizontally, in which additional servers are added to the cluster

- Vertically, in which servers with larger capacities replace existing servers

You can scale a cluster while it is running. When you scale horizontally, new instances are added without changes to the existing server instances.

In addition to adding resources, you can assign data nodes to specific tiers. For information about using tiers, see the Druid multitenancy documentation.

Vertical scaling

When you scale vertically, new server instances are created to replace the existing instances. Each existing instance is terminated only after ingestion on it has completed. Scaling is complete when the data segment replicants also exist on the new instances.

Horizontal scaling

Scaling a cluster horizontally typically means adding data servers. To do so, go to the cluster's Setup page, increase the Instance count for the servers you want to add, and click Apply changes.

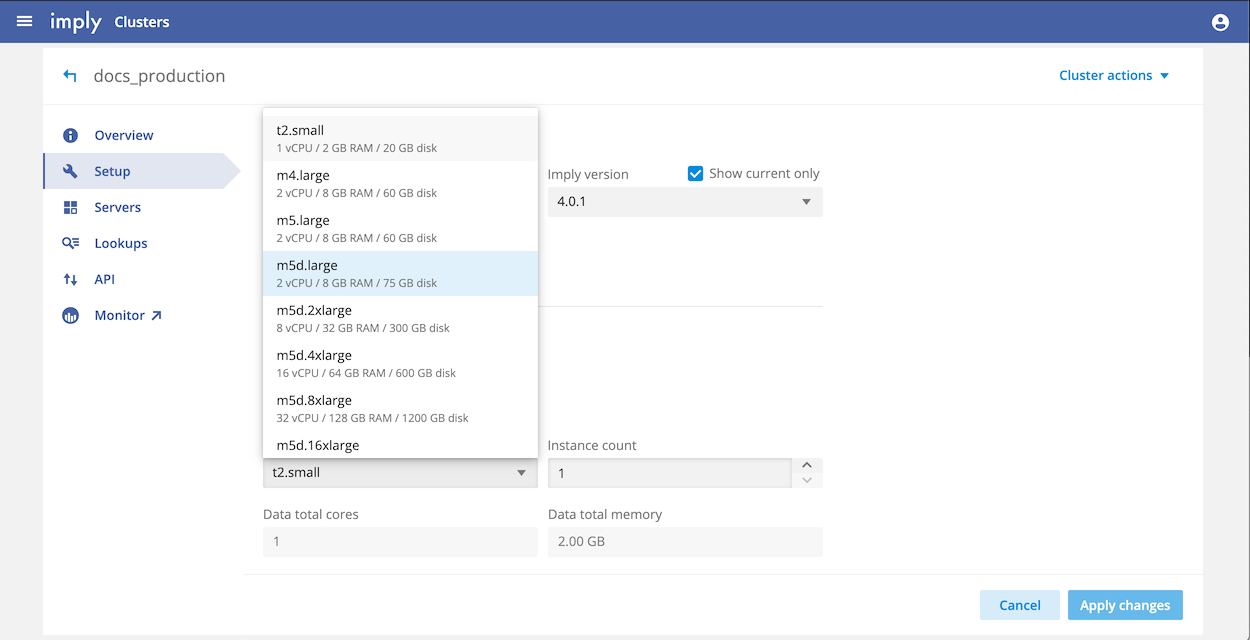

You can also change the server specs of the servers in the cluster. The following figure, for example, shows the upgrade options for AWS.

Choose the new instance types and click the Apply changes button. Before any changes are made, the manager UI informs you of the type of update that will be made.

After confirming the change, you will be brought to the cluster overview page, where you can monitor the progress of the update. Click the Changes button to see the list of changes being made in this update.

When the update finishes successfully, the cluster state shows as RUNNING.

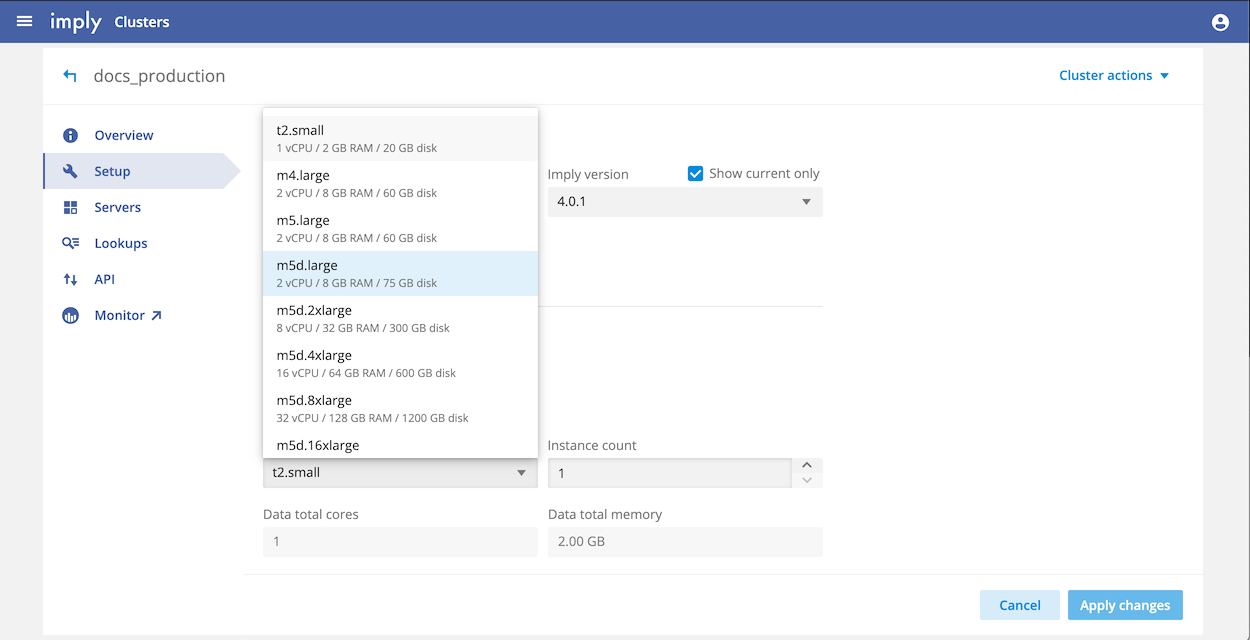

You can also change the server specs of the servers in the cluster. The following figure, for example, shows the upgrade options for AWS.

Choose the new instance type and click Apply changes. Before any changes are made, the Manager UI informs you of the type of update that will be made.

After you confirm your change, you return to the cluster overview page where you can monitor the progress of the update. To see the list of changes implemented in this update, click Changes.

When the update finishes successfully, the cluster state changes to RUNNING.

Additional data tiers

You can increase the number of data tiers in your deployment. By default, you can have three data tiers. Enabling data tiers beyond three depends on how you deployed your Imply instance:

- GKE Enhanced: You can have up to 40 data tiers total. Configure them like your existing data tiers using the Data instance tiers dropdown on the Setup page in Imply Manager.

- Kubernetes deployments: Requires version 9 or later of the agent. Verify the version in the values file for the Helm chart (images.agent.tag). You can have any number of data tiers. For information about adding tiers, see Adding additional data tiers.

- Linux deployments: Requires version 6 or later of the agent. You can have any number of data tiers. For information about adding data tiers, see Install agents.
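The limits in the list above can be summarized as a small lookup. This is a minimal sketch, not an Imply API: the deployment labels and function names are hypothetical, and it assumes that an older or unknown agent version keeps the default limit of three tiers.

```python
import math
from typing import Optional

DEFAULT_MAX_TIERS = 3
GKE_ENHANCED_MAX_TIERS = 40
# Minimum agent major version that removes the tier limit, per the list above.
UNLIMITED_TIERS_MIN_AGENT = {"kubernetes": 9, "linux": 6}


def max_data_tiers(deployment: str, agent_major: Optional[int] = None) -> float:
    """Maximum number of data tiers; math.inf means no fixed limit.

    deployment is a hypothetical label: "gke-enhanced", "kubernetes",
    "linux", or anything else for the default.
    """
    if deployment == "gke-enhanced":
        return GKE_ENHANCED_MAX_TIERS
    min_agent = UNLIMITED_TIERS_MIN_AGENT.get(deployment)
    if min_agent is not None and agent_major is not None and agent_major >= min_agent:
        return math.inf
    # Assumption: with an older or unknown agent version, the default limit applies.
    return DEFAULT_MAX_TIERS


def can_add_tier(current_tiers: int, deployment: str, agent_major: Optional[int] = None) -> bool:
    return current_tiers + 1 <= max_data_tiers(deployment, agent_major)


# On Kubernetes, take the major version from images.agent.tag in the Helm values file.
print(can_add_tier(3, "kubernetes", agent_major=8))  # False
print(can_add_tier(3, "kubernetes", agent_major=9))  # True
```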

Stop a cluster

To stop a cluster, you must be an Imply Manager user with the ManageClusters permission. Navigate to the cluster's overview page and click Stop. You are prompted to confirm the operation.

Once the operation completes, the cluster state shows as STOPPED.

In Imply Hybrid, stopping a cluster terminates all cluster infrastructure, including EC2 instances, auto-scaling groups, load balancers, and RDS instances. A snapshot of the database is taken and automatically restored when the cluster is started again. Stopping a cluster does not affect data stored in S3.

The following shared resources are not terminated:

- VPC

- Subnets

- Internet gateway

- Security groups

You can start the cluster again by clicking Start on the overview page.

Terminate a cluster

Terminating a cluster stops and tears down the cluster. Once a cluster is terminated, it cannot be used again. The data on the cluster, along with data cubes and dashboards, are permanently removed.

To terminate a cluster, you must be an Imply Manager user with the ManageClusters permission. Navigate to the cluster's overview page and click Terminate. You are prompted to confirm the operation.

Once the operation completes, the cluster state shows as TERMINATED.

Configure extensions

The Druid extensions dialog shows which Druid extensions are already included in your cluster and which are available to enable. To open it, go to the Advanced config section of the cluster setup page and click the edit icon next to the Druid extensions field.

At the top of the dialog are the names of the Druid extensions already included. Click a name for more information about that extension.

See Imply Cluster Extensions and Extensions in the Apache Druid documentation for more information on extensions.

Server properties

You can configure server properties from the cluster setup page. Configurable properties include the JVM settings for each service, such as the maximum memory allocation pool (-Xmx), the initial memory allocation pool (-Xms), and the maximum direct memory size (-XX:MaxDirectMemorySize).

For the default settings, see Default server properties.

Set server properties

To set properties, follow these steps:

- From the Imply Manager home, click the Manage button next to the cluster you want to configure.

- Click the Setup tab.

- Scroll to the bottom of the page and expand the Advanced config section.

- Under Service properties, add the property and new value in the text field for the desired service, using the format shown in these examples. The settings you enter override the default settings that appear in the following section.

jvm.config.xms=-Xms300m

jvm.config.xmx=-Xmx300m

jvm.config.MaxDirectMemorySize=-XX:MaxDirectMemorySize=200000m

- Click Apply changes.
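The Manager applies these overrides for you. The following minimal sketch only illustrates the override semantics described in the steps above: each line is key=value, entered settings replace defaults with the same key, and all other defaults stay in place. Because a value can itself contain "=" (as in the MaxDirectMemorySize example), each line is split on the first "=" only. The function names are hypothetical, and the handling of a repeated key is an assumption.

```python
from typing import Dict, Iterable


def parse_properties(lines: Iterable[str]) -> Dict[str, str]:
    """Parse key=value lines, ignoring blank lines.

    Split on the first '=' only: values such as
    -XX:MaxDirectMemorySize=200000m contain '=' themselves.
    """
    props: Dict[str, str] = {}
    for raw in lines:
        line = raw.strip()
        if not line:
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"not a key=value line: {raw!r}")
        props[key.strip()] = value.strip()  # assumption: a repeated key keeps the last value
    return props


def apply_overrides(defaults: Dict[str, str], overrides: Dict[str, str]) -> Dict[str, str]:
    """Entered settings replace defaults with the same key; other defaults stay."""
    merged = dict(defaults)
    merged.update(overrides)
    return merged


# A subset of the Historical defaults listed under Default server properties.
historical_defaults = parse_properties("""
jvm.config.xms=-Xms256m
jvm.config.xmx=-Xmx256m
druid.processing.numThreads=1
""".splitlines())

overrides = parse_properties("""
jvm.config.xms=-Xms300m
jvm.config.xmx=-Xmx300m
jvm.config.MaxDirectMemorySize=-XX:MaxDirectMemorySize=200000m
""".splitlines())

for key, value in apply_overrides(historical_defaults, overrides).items():
    print(f"{key}={value}")
```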

Default server properties

The default values are intended for running a quickstart cluster on a moderately powerful desktop or laptop. They are not intended for production or large-scale testing systems.

This section lists the default service settings for a cluster created using Docker for Imply Manager. They are provided for reference, or in case you need to restore your cluster to its original state. If you created your cluster from a Helm chart with Kubernetes, the default values are specified in the Helm file instead.

Applying any server property change triggers an update (rolling or otherwise). You must proceed with the update for the changes to take effect.

Coordinator

jvm.config.xms=-Xms128m

jvm.config.xmx=-Xmx128m

Overlord

jvm.config.xms=-Xms128m

jvm.config.xmx=-Xmx128m

Broker

jvm.config.xms=-Xms256m

jvm.config.xmx=-Xmx256m

druid.processing.buffer.sizeBytes=50000000

druid.processing.numThreads=1

Router

jvm.config.xms=-Xms64m

jvm.config.xmx=-Xmx64m

Historical

jvm.config.xms=-Xms256m

jvm.config.xmx=-Xmx256m

druid.processing.buffer.sizeBytes=50000000

druid.processing.numThreads=1

druid.segmentCache.locations=[{"path":"/mnt/var/druid/segment-cache","maxSize":10000000000}]

druid.server.maxSize=10000000000

druid.historical.cache.useCache=true

druid.historical.cache.populateCache=true

druid.cache.sizeInBytes=50000000

MiddleManager

jvm.config.xms=-Xms64m

jvm.config.xmx=-Xmx64m

druid.worker.capacity=1

druid.indexer.runner.javaOpts=-server -Xmx2g -Duser.timezone=UTC -Dfile.encoding=UTF-8 -XX:MaxDirectMemorySize=3g -XX:+PrintGC -XX:+PrintGCDateStamps -XX:+ExitOnOutOfMemoryError -XX:+HeapDumpOnOutOfMemoryError -Djava.util.logging.manager=org.apache.logging.log4j.jul.LogManager -Daws.region=us-east-1

druid.processing.buffer.sizeBytes=50000000

druid.processing.numThreads=1
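As a rough check of what the defaults above imply, the following sketch applies the processing-buffer sizing rule from the Apache Druid tuning documentation, buffer.sizeBytes × (numThreads + numMergeBuffers + 1) with numMergeBuffers defaulting to max(2, numThreads / 4), to the Historical (and identical Broker) values. It also totals the per-task heap and direct memory limits set in the MiddleManager's javaOpts. The helper names are hypothetical, and the results are estimates, not a description of how Imply sizes clusters.

```python
import re
from typing import Dict

# JVM size suffixes are binary multiples (k = 1024 bytes, and so on).
_UNITS = {"": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3, "t": 1024 ** 4}


def jvm_size_to_bytes(size: str) -> int:
    match = re.fullmatch(r"(\d+)([kmgt]?)", size.strip().lower())
    if match is None:
        raise ValueError(f"unrecognized JVM size: {size!r}")
    return int(match.group(1)) * _UNITS[match.group(2)]


def processing_direct_memory(props: Dict[str, str]) -> int:
    """Druid sizing rule: buffer.sizeBytes * (numThreads + numMergeBuffers + 1)."""
    buffer_bytes = int(props["druid.processing.buffer.sizeBytes"])
    threads = int(props["druid.processing.numThreads"])
    # Druid's default for numMergeBuffers is max(2, numThreads / 4).
    merge_buffers = int(props.get("druid.processing.numMergeBuffers", max(2, threads // 4)))
    return buffer_bytes * (threads + merge_buffers + 1)


def task_memory_limits(props: Dict[str, str]) -> int:
    """Per-task -Xmx plus MaxDirectMemorySize, times worker capacity.

    Ignores metaspace, thread stacks, and other JVM overhead.
    """
    opts = props["druid.indexer.runner.javaOpts"].split()
    heap = next(jvm_size_to_bytes(o[len("-Xmx"):]) for o in opts if o.startswith("-Xmx"))
    direct_flag = "-XX:MaxDirectMemorySize="
    direct = next(jvm_size_to_bytes(o[len(direct_flag):]) for o in opts if o.startswith(direct_flag))
    return (heap + direct) * int(props["druid.worker.capacity"])


historical = {
    "druid.processing.buffer.sizeBytes": "50000000",
    "druid.processing.numThreads": "1",
}
middle_manager = {
    "druid.worker.capacity": "1",
    "druid.indexer.runner.javaOpts": (
        "-server -Xmx2g -Duser.timezone=UTC -Dfile.encoding=UTF-8 "
        "-XX:MaxDirectMemorySize=3g -XX:+PrintGC -XX:+PrintGCDateStamps "
        "-XX:+ExitOnOutOfMemoryError -XX:+HeapDumpOnOutOfMemoryError "
        "-Djava.util.logging.manager=org.apache.logging.log4j.jul.LogManager "
        "-Daws.region=us-east-1"
    ),
}

print(processing_direct_memory(historical))  # 200000000
print(task_memory_limits(middle_manager) / 1024 ** 3)  # 5.0
```

With one processing thread, the default of two merge buffers dominates: four 50 MB buffers, or 200,000,000 bytes of direct memory. Raising druid.worker.capacity multiplies the per-task 5 GiB of heap and direct memory limits accordingly.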