Docker

In this quickstart, we will download the Apache Druid image from Docker Hub and set it up on a single machine using Docker and Docker Compose. The cluster will be ready to load data after completing this initial setup.

Before beginning the quickstart, it is helpful to read the general Druid overview and the ingestion overview, as the tutorials will refer to concepts discussed on those pages. Additionally, familiarity with Docker is recommended.

Prerequisites

- Docker

Getting started

The Druid source code contains an example docker-compose.yml that pulls an image from Docker Hub. It is suited for use as an example environment and for experimenting with Docker-based Druid configuration and deployments.

Compose file

The example docker-compose.yml creates a container for each Druid service, as well as a ZooKeeper container and a PostgreSQL container that serves as the metadata store. Deep storage is a local directory, configured by default as ./storage relative to your docker-compose.yml file. It is mounted as /opt/data and shared between the Druid containers that require access to deep storage. The Druid containers are configured via an environment file.

Configuration

The Druid Docker container is configured via environment variables, which may additionally specify paths to the standard Druid configuration files.

Special environment variables:

- JAVA_OPTS -- set Java options
- DRUID_LOG4J -- set the entire log4j.xml verbatim
- DRUID_LOG_LEVEL -- override the default log level in the default log4j configuration
- DRUID_XMX -- set Java Xmx
- DRUID_XMS -- set Java Xms
- DRUID_MAXNEWSIZE -- set Java max new size
- DRUID_NEWSIZE -- set Java new size
- DRUID_MAXDIRECTMEMORYSIZE -- set Java max direct memory size
- DRUID_CONFIG_COMMON -- full path to a file for Druid 'common' properties
- DRUID_CONFIG_${service} -- full path to a file for Druid 'service' properties
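
As a rough illustration, a few of these variables might appear together in an environment file as shown below. Every value here is an assumption chosen for the example (the sizes, the log level, and the /opt/druid-conf path are hypothetical), not a recommended setting.

```shell
# Hypothetical environment file fragment; all values are illustrative assumptions.
# JVM heap sizing, using the usual Java size notation.
DRUID_XMS=1g
DRUID_XMX=1g
# Maximum direct (off-heap) memory for the JVM.
DRUID_MAXDIRECTMEMORYSIZE=2g
# Override the default log level.
DRUID_LOG_LEVEL=info
# Hypothetical path to a file holding the 'common' properties.
DRUID_CONFIG_COMMON=/opt/druid-conf/common.runtime.properties
```

Comments are kept on their own lines because Docker environment files do not support trailing comments after a value.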

In addition to the special environment variables, the script which launches Druid in the container will also attempt to use any environment variable starting with the druid_ prefix as a command-line configuration. For example, an environment variable

druid_metadata_storage_type=postgresql

would be translated into

-Ddruid.metadata.storage.type=postgresql

for the Druid process in the container.
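
The naming rule can be sketched in a few lines of shell. This is only an illustration of the translation described above, not the launch script shipped in the image; the function name to_jvm_flag is hypothetical.

```shell
# Illustrative sketch only; not the actual launch script from the Druid image.
# Converts one NAME=value pair into a JVM system-property flag.
to_jvm_flag() {
  pair=$1
  name=${pair%%=*}    # everything before the first '='
  value=${pair#*=}    # everything after the first '='
  # Replace every underscore in the variable name with a dot.
  dotted=$(printf '%s' "$name" | tr '_' '.')
  printf '%s\n' "-D${dotted}=${value}"
}

to_jvm_flag 'druid_metadata_storage_type=postgresql'
# prints: -Ddruid.metadata.storage.type=postgresql
```

Only the name is rewritten; the value is passed through unchanged, so values that themselves contain underscores or '=' characters are preserved.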

The Druid docker-compose.yml example uses a single environment file to specify the complete Druid configuration. For production use cases, however, we suggest using either DRUID_CONFIG_COMMON and DRUID_CONFIG_${service}, or specially tailored, service-specific environment files.

Launching the cluster

Run docker-compose up to launch the cluster with a shell attached, or docker-compose up -d to run the cluster in the background. If using the example files directly, this command should be run from distribution/docker/ in your Druid installation directory.

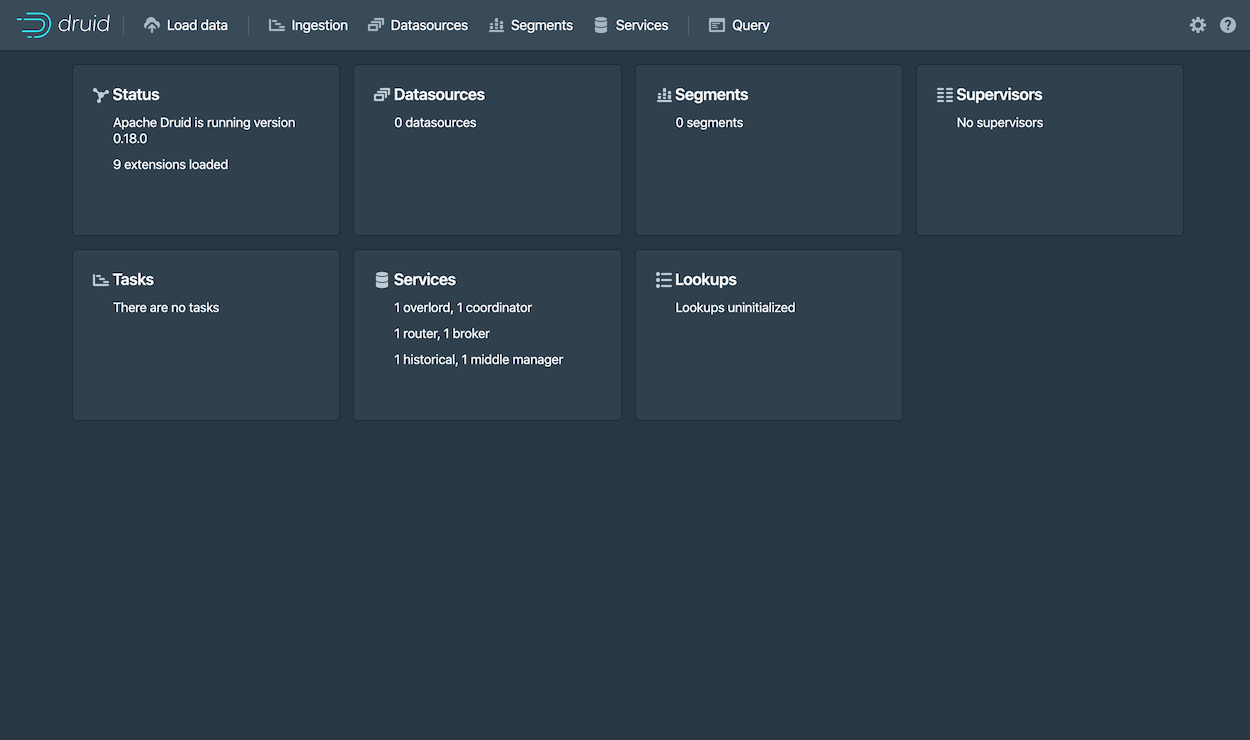

Once the cluster has started, you can navigate to http://localhost:8888. The Druid router process, which serves the Druid console, resides at this address.

It takes a few seconds for all the Druid processes to fully start up. If you open the console immediately after starting the services, you may see some errors that you can safely ignore.
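
Rather than refreshing the console by hand, you could poll the router until it responds. The sketch below assumes curl is installed and uses the /status/health endpoint that Druid processes expose; the function name wait_for_url and the default of 30 attempts at 2-second intervals are arbitrary choices for this example.

```shell
# wait_for_url URL [ATTEMPTS] [DELAY_SECONDS]
# Returns 0 as soon as the URL answers successfully, or 1 after all attempts fail.
wait_for_url() {
  url=$1
  attempts=${2:-30}
  delay=${3:-2}
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # -f: treat HTTP error statuses as failures; -s: silent; discard the body.
    if curl -fs -o /dev/null "$url"; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Usage (assumes the example cluster is running on this machine):
# wait_for_url http://localhost:8888/status/health && echo "Router is up"
```

A successful response only shows that the router itself is up; other services may still be starting, so brief errors in the console remain possible.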

From here you can follow along with the Quickstart, or elaborate on your docker-compose.yml to add any additional external service dependencies as necessary.

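Docker memory requirements

If any process crashes with exit code 137, Docker likely does not have enough memory allocated. Exit code 137 is 128 + 9, meaning the process was killed with SIGKILL, which is the signal the kernel's out-of-memory killer sends. 6 GB may be a good place to start.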
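
To see how much memory Docker currently has available, one option is to read the MemTotal field from docker info, which is reported in bytes. The helper below is a small sketch for converting that figure to MiB; the name bytes_to_mib is hypothetical.

```shell
# bytes_to_mib BYTES
# Prints the given byte count as whole mebibytes (integer division).
bytes_to_mib() {
  echo $(( $1 / 1024 / 1024 ))
}

bytes_to_mib 6442450944
# prints: 6144

# Usage against a running Docker daemon:
# bytes_to_mib "$(docker info --format '{{.MemTotal}}')"
```

On setups where Docker runs inside a virtual machine, the reported total may come out slightly below the configured allocation.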