Release notes

2022.10

The multi-stage query task engine became available as part of the Druid project starting with Druid 24.0. For information about this release and subsequent ones, see the Druid section of the Imply release notes.

2022.09

- The extension for the multi-stage query (MSQ) task engine is now named druid-multi-stage-query. Previously, it was named imply-talaria.

- The numTasks (msqNumTasks) property is no longer available. As described in the 2022.07 release notes, use maxNumTasks instead.

- Imply Hybrid now loads the MSQ task engine as an extension instead of a feature flag. If you are upgrading from a previous release and have the feature flag enabled, no action is needed. In new deployments, use the extension.

2022.08

- The Query view in the UI now includes improved demo queries that you can load to learn more about what the SQL-task engine is capable of. To load the queries, go to ... > Load demo queries (replaces current tabs).

- The Connect external data wizard now automatically detects the primary time column for data you are trying to ingest.

- Context parameters for the SQL-task engine have changed:

  - They no longer include msq at the start. For example, msqMaxNumTasks is now maxNumTasks.

  - For the API, update any existing queries that include context parameters.

  - For the UI, you now specify context parameters through various UI options. You no longer submit them as a comment at the start of a query. For example, to set the maxNumTasks context parameter through the UI, use the Max tasks menu.

- There are now additional guardrails for column names. If your query includes columns with restricted names that aren't being transformed, you'll encounter a ColumnNameRestrictedFault error.

- Fixed an issue where query reports included duplicate fields.
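For API clients that still send the old parameter names, the rename can be sketched in Python. This is an illustration only: the helper name strip_msq_prefix is hypothetical, and it assumes that every msq-prefixed parameter is renamed by dropping the prefix and lowercasing the next letter. The notes above confirm this only for msqMaxNumTasks.

```python
def strip_msq_prefix(context: dict) -> dict:
    """Return a copy of a query context with the leading 'msq' removed from keys.

    Assumption: a key such as msqMaxNumTasks maps to maxNumTasks. Keys that do
    not start with 'msq' followed by an uppercase letter are left unchanged.
    """
    renamed = {}
    for key, value in context.items():
        if key.startswith("msq") and len(key) > 3 and key[3].isupper():
            key = key[3].lower() + key[4:]
        renamed[key] = value
    return renamed


old_context = {"msqMaxNumTasks": 3, "sqlTimeZone": "UTC"}
new_context = strip_msq_prefix(old_context)
```

Review the converted context before submitting it, because some older parameters (for example msqNumTasks) were later removed rather than renamed.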

2022.07

- There are two new context parameters:

  - msqMaxNumTasks replaces msqNumTasks. This property specifies the maximum number of tasks to launch, including the controller task. When both are present, msqMaxNumTasks takes precedence. The default value is 2, which is the lowest supported number of tasks: one controller and one worker. msqNumTasks will be removed in a future release. You can set this property in the console with the Max tasks option or include it as a context parameter.

  - msqTaskAssignment determines how the number of tasks is chosen. You can set it to either max, where MSQ uses as many tasks as possible (up to the limit set by msqMaxNumTasks), or auto, where MSQ uses as few tasks as possible without exceeding 10 GiB or 10,000 files per task.

- The maximum number of tasks control is now more prominently displayed near the Run button in the web console.

- MSQ now shows the following errors:

  - An InvalidNullByte error if a string column includes a null byte, which is not allowed.

  - An InsertTimeNull error if an INSERT or REPLACE query contains a null timestamp.

- Improved how the web console detects MSQ. The sql-task engine for MSQ is only available if the MSQ extension is configured correctly.

- The Connect external data wizard now automatically detects columns that are suitable to be parsed as the primary timestamp column.

- The web console can now recover from network errors and connectivity issues while running a query.

- You can now open a query detail archive generated in another cluster.

- Improved the counters. There is now one counter per input. The counters now contain more detail, are sorted, and are rendered as a table.

- Improved reporting for task cancellations and worker errors.

- Fixed the following bugs:

  - Moving from one tab to another canceled a native or sql query if one was already running.

  - Counters reset to 0 under certain crash conditions.

  - Certain errors were being reported incorrectly (for example, invalid "Access key ID" in an S3 input source).

  - The shuffle mesh used an excessive number of threads. Communication now happens in chunks, so you no longer need to set druid.server.http.numThreads to a very high number.

  - While using durable storage, if a worker gets killed for any reason, the controller will still clean up the stage outputs of all the workers as a fail-safe.

- The location of some of the documentation has changed. You may need to update your bookmarks. Additionally, the Multi-Stage Query Engine (MSQE) is now referred to as the Multi-Stage Query (MSQ) Framework. You may still see some references to MSQE.
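To illustrate the two msqTaskAssignment settings introduced in this release, here is a minimal Python sketch of how a worker count could follow from the stated limits. It is not the engine's implementation. The function name and its inputs are hypothetical, and clamping to the task limit when the input would need more workers is an assumption, since these notes do not say what happens in that case.

```python
import math

GIB = 1024 ** 3
MAX_BYTES_PER_TASK = 10 * GIB   # "10 GiB per task" from the notes above
MAX_FILES_PER_TASK = 10_000     # "10,000 files per task" from the notes above


def worker_count(strategy: str, max_num_tasks: int, total_bytes: int, num_files: int) -> int:
    """Estimate the number of worker tasks for a given assignment strategy.

    max_num_tasks counts the controller, so at most max_num_tasks - 1 workers
    are available. The lowest supported value is 2 (one controller, one worker).
    """
    if max_num_tasks < 2:
        raise ValueError("max_num_tasks must be at least 2")
    max_workers = max_num_tasks - 1

    if strategy == "max":
        # Use as many workers as the limit allows.
        return max_workers
    if strategy == "auto":
        # Use as few workers as possible while keeping each one under both limits.
        by_bytes = math.ceil(total_bytes / MAX_BYTES_PER_TASK)
        by_files = math.ceil(num_files / MAX_FILES_PER_TASK)
        needed = max(1, by_bytes, by_files)
        # Assumption: never exceed the configured task limit.
        return min(needed, max_workers)
    raise ValueError(f"unknown strategy: {strategy}")
```

For example, under these assumptions a 25 GiB input split across 100 files with a limit of 5 tasks would use 3 workers in auto mode and 4 workers in max mode.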

2022.06

Changes and improvements

- Property names have changed. Previously, properties used the talaria prefix. They now use either msq or multiStageQuery depending on the context. Note that the extension is still named imply-talaria.

- You no longer need a license that has an explicit entitlement for MSQE.

- Reports for MSQE queries now include warning sections that provide information about the type of warning and number of occurrences.

- You can now use REPLACE in MSQE queries. For more information, see REPLACE.

- The default mode for MSQE is now strict. This means that a query fails if there is a single malformed record. You can change this behavior using the context variable msqMode (previously talariaMode).

- The behavior of msqNumTasks (previously talariaNumTasks) has changed. It now accurately reflects the total number of slots required, including the controller. Previously, the controller was not accounted for.

- The EXTERN operator now requires READ access to the resource type EXTERNAL that is named EXTERNAL. This new permission must be added manually to API users who issue queries that access external data. In Imply Enterprise Hybrid and in Imply Enterprise, this permission is added automatically to users with the ManageDatasets permission. For Imply Enterprise, you must update Imply Manager to 2022.06 prior to updating your Imply version to 2022.06.

- Queries that carry large amounts of data through multiple stages are sped up significantly due to performance enhancements in sorting and shuffling.

- Queries that use GROUP BY followed by ORDER BY, PARTITIONED BY, or CLUSTERED BY execute with one less stage, improving performance.

- Strings are no longer permitted to contain null bytes. Strings containing null bytes will result in an InvalidNullByte error.

- INSERT or REPLACE queries with null values for __time now exit with an InsertTimeNull error.

- INSERT or REPLACE queries that are aborted due to lock preemption now exit with an InsertLockPreempted error. Previously, they would exit with an UnknownError.

- MSQE-generated segments can now be used with Pivot datacubes and support auto-compaction if the query conforms to the conditions defined in GROUP BY.

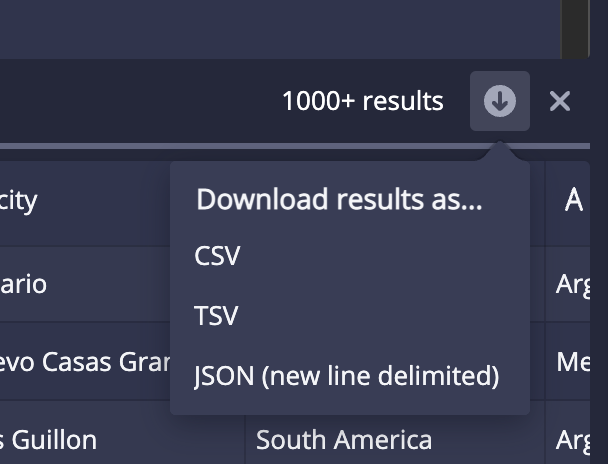

- You an now download the results of a query as a CSV, TSV, JSON file. Click the download icon in the Query view after you run a query:

.

.

Fixes

- Fixed an issue where the controller task would stall indefinitely when its worker tasks could not all start up. Now, the controller task exits with a TaskStartTimeout after ten minutes.

- Fixed an issue where the controller task would exit with an UnknownError when canceled. Now, the controller task correctly exits with a Canceled error.

- Fixed an issue where the error message shown when groupByEnableMultiValueUnnesting: false is set in the context of a query that uses GROUP BY referred to the parameter executingNestedQuery. It now uses the correct parameter groupByEnableMultiValueUnnesting.

- Fixed an issue where the true error would sometimes be shadowed by a WorkerFailed error. (20466)

- Fixed an issue where tasks would not retry certain retryable Overlord API errors. Now, these errors are retried, improving reliability.

2022.05

- You no longer need to load the imply-sql-async extension to use the Multi-Stage Query Engine. You only need to load the imply-talaria extension.

- The API endpoints have changed. Earlier versions of the Multi-Stage Query Engine used the /druid/v2/sql/async/ endpoint. The engine now uses different endpoints based on what you're trying to do: /druid/v2/sql/task and /druid/indexer/v1/task/. For more information, see API.

- You no longer need to set a context parameter for talaria when making API calls. API calls to the task endpoint use the Multi-Stage Query Engine automatically.

- Fixed an issue that caused an IndexOutOfBoundsException error to occur, which led to some ingestion jobs failing.

2022.04

- Stage outputs are now removed from local disk when no longer needed. This reduces the total amount of local disk space required by jobs with more than two stages. (15030)

- It is now possible to control segment sort order independently of CLUSTERED BY, using the new talariaSegmentSortOrder context parameter. (18320)

- There is now a guardrail on the maximum number of input files. Exceeding this limit leads to a TooManyInputFiles error. (15020)

- Queries now report the error code WorkerRpcFailed when controller-to-worker or worker-to-worker communication fails. Previously, this would be reported as an UnknownError. (18971)

- Fixed an issue where queries with large numbers of partitions could run out of memory. (19162)

- Fixed an issue where worker errors were sometimes hidden by controller errors that cascaded from those worker failures. Now, the original worker error is preferred. (18971)

- Fixed an issue where worker errors were incompletely logged in worker task logs. (18964)

- Fixed an issue where workers could sometimes fail soon after startup with a NoSuchElementException. (19048)

- Fixed an issue where worker tasks sometimes continued running after controller failures. (18052)

2022.03

- The web console now includes counters for external input rows and files, Druid table input rows and segments, and sort progress. As part of this change, the query detail response format has changed. Clients performing programmatic access will need to be updated. (15048, 15208, 18070)

- Added the ability to avoid unnesting multi-value string dimensions during GROUP BY. This is useful for performing ingestion with rollup. (15031, 16875, 16887)

- EXPLAIN PLAN FOR now works properly on INSERT queries. (17321)

- External input files are now read in parallel when running in Indexers. (17933)

- Improved accuracy of partition-determination. Segments generated by INSERT are now more regularly sized. (17867)

- CannotParseExternalData error reports now include input file path and line number information. (16016)

- There is now an upper limit on the number of workers, partially determined by available memory. Exceeding this limit leads to a TooManyWorkers error. (15021)

- There is now a guardrail on the maximum size of data involved in a broadcast join. Queries that exceed the limit will report a BroadcastTablesTooLarge error code. (15024)

- When a worker fails abruptly, the controller now reports a WorkerTaskFailed error code instead of UnknownError. (15024)

- Controllers will no longer give up on workers before the Overlord does. Previously, the controller would fail with the message "Connection refused" if workers took longer than 30 seconds to start up. (17602)

- Fixed an issue where INSERT queries that generate large numbers of time chunks may fail with a message containing "SketchesArgumentException: K must be >=2 and <=32768 and a power of 2". This happened when the number of generated time chunks was close to the TooManyBuckets limit. (14764)

- Fixed an issue where queries with certain input sources would report an error with the message "Too many workers" when there were more files than workers. (18022)

- Fixed an issue where SELECT queries with LIMIT would sometimes return more rows than intended. (17394)

- Fixed an issue where workers could intermittently fail with an UnknownError with the message "Invalid midstream marker". (17602)

- Fixed an issue where workers could run out of memory when connecting to large numbers of other workers. (16153)

- Fixed an issue where workers could run out of memory during GROUP BY of large external input files. (17781)

- Fixed an issue where workers could retry reading the same external input file repeatedly and never succeed. (17936, 18009)

2022.02

- INSERT uses PARTITIONED BY and CLUSTERED BY instead of talariaSegmentGranularity and ORDER BY. (15045)

- INSERT validates the datasource name at planning time instead of execution time. (15038)

- SELECT queries support OFFSET. (15000)

- The "Connect external data" feature in the Query view of the web console correctly supports Parquet files. Previously, this feature would report a "ParseException: Incorrect Regex" error on Parquet files. (16197)

- The Query detail API includes startTime and durationMs for the whole query. (15046)

2022.01

- Multi-stage queries can now be issued using the async query API provided by the imply-sql-async extension. (15014)

- The new talariaFinalizeAggregations parameter may be set to false to cause queries to emit nonfinalized aggregation results. (15010)

- INSERT queries obtain minimally-sized locks rather than locking the entire target datasource. (15003)

- The Query view of the web console now has tabs and an engine selector that allows issuing multi-stage queries. The dedicated "Talaria" view has been removed.

- The web console includes an ingestion spec conversion tool. It performs a best-effort conversion of a native batch ingestion spec into a SQL query. It does not guarantee perfect fidelity, so we recommend that you review the generated SQL query before running it.

- INSERT queries with LIMIT and talariaSegmentGranularity set to "all" now execute properly and write a single segment to the target datasource. Previously, these queries would fail. (15051)

- INSERT queries using sqlReplaceTimeChunks now always produce "range" shard specs when appropriate. Previously, "numbered" shard specs would sometimes be produced instead of "range" shard specs as documented. (14768)

2021.12

- Initial release.